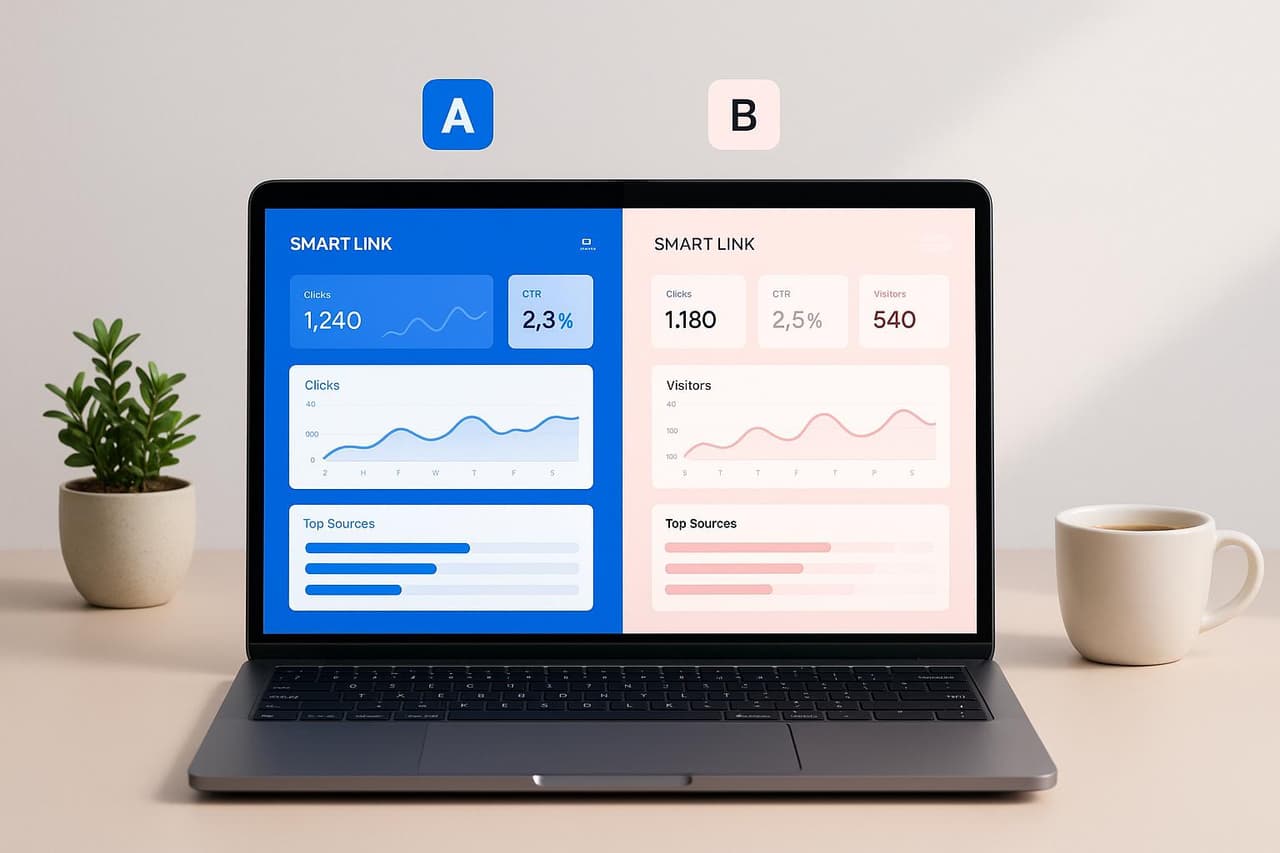

A/B testing for smart links helps marketers test different link variations to improve engagement and conversions. It’s about comparing two versions (A and B) to see which performs better. Smart links add value by automatically directing users to the most relevant destination (e.g., mobile apps or websites) based on factors like device type or location.

Why It Matters:

- Boosts Results: A/B testing can lift conversion rates by as much as 50%, and smart links can raise click-through rates by around 30%.

- Data-Driven Decisions: Helps marketers avoid guesswork and focus on what works.

- Real-World Wins: Companies like Going and Campaign Monitor have seen triple-digit growth and recovered users through strategic A/B tests.

How to Start:

- Set Clear Goals: Define KPIs like conversion rate, click-through rate, or revenue.

- Use UTM Parameters: Track performance with consistent, clean UTM codes.

- Choose Tools: Platforms like PIMMS simplify testing with built-in analytics, app redirection, and shared dashboards.

- Create Variations: Test different landing pages, CTAs, or app vs. website destinations.

- Monitor Metrics: Focus on click-through rates, conversions, bounce rates, and revenue.

Quick Comparison of A/B Testing Steps:

By following these steps, you can optimize your smart links and improve campaign performance with actionable insights.

How to Prepare for A/B Testing

Set Clear Goals and KPIs

Before diving into A/B testing, it's crucial to define what success looks like. The metrics you track should align with your hypothesis and broader business objectives. Without clear goals, your test results might lack direction and focus.

Start by pinpointing your primary objective. Are you aiming to increase revenue, improve user engagement, or cut down on cart abandonment? Once you've nailed this down, pick KPIs that directly tie into that goal.

"Connecting your goals and project guarantees you consistently choose KPIs that make a real difference." - Chinmay Daflapurkar, Digital Marketing Associate, Arista Systems

For instance, if you're focused on reducing cart abandonment in an e-commerce setting, you might track conversion rates and revenue per user. On the other hand, a SaaS company could prioritize metrics like sign-up rates or user engagement.

It's also helpful to look at both quantitative and qualitative data. Imagine you're testing a website redesign. While your primary KPI might be conversion rate, secondary metrics like time on page and bounce rate can give you a fuller picture of user behavior [3].

When setting targets, keep industry benchmarks in mind. For example, the median conversion rate across industries is 4.3% [2]. While this can serve as a baseline, remember that performance varies by sector, so set goals that make sense for your niche.

Once you've outlined your goals and KPIs, make sure your tracking parameters are set up to capture every detail of your test.

Set Up Tracking Parameters

Accurate tracking is the backbone of any successful A/B test. UTM parameters, which are added to URLs, allow you to measure how different test variations perform.

There are five key UTM parameters you should know: source, medium, campaign, term, and content. Each one plays a role in identifying where your traffic is coming from and how it's interacting with your test. Fun fact: businesses that effectively use UTM parameters see 20% higher conversion rates [4].

Stick to consistent, lowercase naming conventions to avoid messy data. For example, use "spring_sale" instead of "Spring Sale!" to keep your tracking clean and reliable.

Let’s say you’re running an email campaign for a spring sale. You could create a UTM code like this to track clicks and purchases:

https://yourwebsite.com/?utm_source=email&utm_medium=email&utm_campaign=spring_sale&utm_content=button_link
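If you generate many links, you can script the same naming convention. Here's a minimal Python sketch that uses only the standard library. It lowercases each value, turns spaces into underscores, and strips other punctuation before encoding the parameters. The helper names `build_utm_url` and `normalize_utm_value` and the exact cleanup rules are illustrative assumptions, not part of any specific UTM builder.

```python
import re
from urllib.parse import urlencode, urlsplit, urlunsplit


def normalize_utm_value(value: str) -> str:
    """Lowercase, turn whitespace into underscores, drop other punctuation."""
    value = value.strip().lower()
    value = re.sub(r"\s+", "_", value)
    return re.sub(r"[^a-z0-9_\-]", "", value)


def build_utm_url(base_url, source, medium, campaign, content=None, term=None):
    """Append normalized UTM parameters to base_url (hypothetical helper)."""
    params = {
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_term": term,
        "utm_content": content,
    }
    # Skip optional parameters that were not provided.
    query = urlencode({k: normalize_utm_value(v) for k, v in params.items() if v})
    scheme, netloc, path, existing_query, fragment = urlsplit(base_url)
    # Keep any query string already present on the base URL.
    full_query = f"{existing_query}&{query}" if existing_query else query
    return urlunsplit((scheme, netloc, path, full_query, fragment))


print(build_utm_url("https://yourwebsite.com/", "email", "email",
                    "Spring Sale!", content="Button Link"))
# → https://yourwebsite.com/?utm_source=email&utm_medium=email&utm_campaign=spring_sale&utm_content=button_link
```

Passing "Spring Sale!" produces "spring_sale", so a messy campaign name can't split your reports into separate rows.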

Before launching your campaign, always test your UTM links. A broken link can derail your entire test, costing you both time and money. Tools like UTM builders can help you create error-free links systematically.
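You can automate part of that pre-launch check as well. The sketch below parses a link with Python's `urllib.parse` and reports three kinds of problems: missing required parameters, duplicated UTM keys, and values outside the lowercase convention. Treating source, medium, and campaign as required is an assumption, so adapt that list to your own tracking plan. The check only inspects the URL. It doesn't confirm that the destination page actually loads.

```python
import re
from urllib.parse import parse_qs, urlsplit

REQUIRED = ("utm_source", "utm_medium", "utm_campaign")  # assumed tracking plan
CLEAN_VALUE = re.compile(r"^[a-z0-9_\-]+$")


def utm_problems(url: str) -> list[str]:
    """Return human-readable problems; an empty list means the link looks clean."""
    parts = urlsplit(url)
    problems = []
    if parts.scheme not in ("http", "https") or not parts.netloc:
        problems.append("not an absolute http(s) URL")
    # parse_qs decodes values, so "Spring+Sale%21" becomes "Spring Sale!".
    params = parse_qs(parts.query, keep_blank_values=True)
    for key in REQUIRED:
        if key not in params or not params[key][0]:
            problems.append(f"missing {key}")
    for key, values in params.items():
        if not key.startswith("utm_"):
            continue
        if len(values) > 1:
            problems.append(f"{key} appears {len(values)} times")
        for value in values:
            if value and not CLEAN_VALUE.match(value):
                problems.append(f"{key}={value!r} uses characters outside [a-z0-9_-]")
    return problems


print(utm_problems("https://yourwebsite.com/?utm_source=email&utm_campaign=Spring+Sale%21"))
```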

Once your tracking is in place, it’s time to select the right tools to manage and analyze your test data.

Choose the Right Tools for Smart Links

The right tools can make or break your A/B testing process. Look for a platform that combines link management with robust analytics, so you don’t have to juggle multiple systems.

PIMMS is an excellent example. It simplifies smart link A/B testing with features like Smart Deep Links for directing users to the best destination, Custom Domains for maintaining brand consistency, and a built-in UTM Builder for standardized tracking. Its real-time dashboard integrates with platforms like Stripe and Shopify, ensuring your test data directly ties to revenue outcomes.

For more advanced testing, PIMMS offers multi-variant testing and detailed analysis through its Business plan [5].

"Revenue per user is particularly useful for testing different pricing strategies or upsell offers. It's not always feasible to directly measure revenue, especially for B2B experimentation, where you don't necessarily know the LTV of a customer for a long time." - Alex Birkett, Co-founder, Omniscient Digital

How to Set Up A/B Tests with Smart Links

Once you've set your goals and tracking parameters, it's time to create multiple test variations. This step builds directly on your earlier setup, ensuring the test runs smoothly and delivers actionable insights.

Create Smart Link Variations

Each variation should tie back to your key performance indicators (KPIs) and differ from the others in a clear, measurable way. Small tweaks often fail to produce meaningful insights, so think big: test different landing pages, call-to-action buttons, or whether users respond better when sent to a mobile app versus a website.

"A/B Testing provides data-driven insights to optimize web development and digital marketing efforts by showing different versions to different user groups and analyzing real user interactions. It helps companies make informed decisions, moving beyond intuition and utilizing statistical significance." [6] - Ali E. Noghli, Product Designer, Google UX Certified, HCI specialist, SaaS & MIS, Accessibility Enthusiast, Design System Evangelist

PIMMS makes this process easier with its built-in A/B testing feature. You can create multiple variations of a single smart link, each pointing users to a different destination. For example, the Smart Deep Links feature allows you to test whether users respond better when directed to a mobile app or a website.

When designing your variations, consider tailoring them to specific devices. Desktop users might appreciate detailed product pages, while mobile users often prefer simplified, action-oriented layouts. PIMMS lets you manage these device-specific variations within a single campaign, keeping everything organized and efficient.

Configure Traffic Distribution

Distributing your traffic correctly is key to achieving statistically valid results. You can split traffic evenly or assign custom weights depending on your goals. PIMMS supports both manual and automated optimization based on real-time performance data.
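The Python sketch below shows what a weighted split can look like under the hood, using a technique called deterministic bucketing. It hashes a visitor identifier so that the same visitor always lands in the same variation, while the weights set each variation's share of traffic. This is a generic technique and doesn't describe how PIMMS assigns traffic. The visitor IDs, the experiment name used as a salt, and the variation names are all hypothetical.

```python
import hashlib
from bisect import bisect_right
from collections import Counter
from itertools import accumulate


def assign_variation(visitor_id: str, experiment: str, weights: dict[str, float]) -> str:
    """Deterministically map a visitor to a variation, proportional to weights."""
    names = list(weights)
    cumulative = list(accumulate(weights[name] for name in names))
    total = cumulative[-1]
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Salting with the experiment name keeps assignments independent across tests.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode()).hexdigest()
    # The first 13 hex digits (52 bits) give a uniform number in [0, 1),
    # scaled here to [0, total).
    point = int(digest[:13], 16) / 16**13 * total
    return names[bisect_right(cumulative, point)]


# Hypothetical 70/30 split between an app destination and a website destination.
weights = {"app": 0.7, "web": 0.3}
counts = Counter(assign_variation(f"visitor-{i}", "spring_sale_dest", weights)
                 for i in range(10_000))
print(counts)  # roughly 7,000 app / 3,000 web
```

This approach hashes the visitor ID rather than picking a variation at random on every click. As a result, a returning visitor keeps seeing the same variation, and their behavior isn't counted under both versions.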

PIMMS also allows you to refine traffic distribution by user location and device type. When planning your distribution, keep your traffic volume in mind - higher volumes allow for more precise splits, while smaller volumes may require longer test durations to gather reliable data.

Check Setup and Integration

Before launching your test, double-check everything to avoid costly mistakes. Test each variation manually across devices and browsers to ensure they work properly. Use analytics tools to confirm UTM tracking is functioning as expected, and verify that fallback behaviors are set up correctly.
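Fallback logic is easier to verify when you write it out. The Python sketch below illustrates device-based routing in general terms. It picks a destination with a crude user-agent check and falls back to the website when no app destination is configured for that platform. This is not PIMMS's implementation. The routing table, the URLs, and the substring checks are simplified assumptions: production systems typically use a dedicated user-agent parser and must also handle visitors who don't have the app installed, which this sketch doesn't model. Running it with sample user agents is a small-scale version of the manual cross-device check described above.

```python
from typing import Optional


def detect_platform(user_agent: str) -> str:
    """Very rough platform detection from a User-Agent string (illustrative only)."""
    ua = user_agent.lower()
    if "iphone" in ua or "ipad" in ua:
        return "ios"
    if "android" in ua:
        return "android"
    return "desktop"


def resolve_destination(user_agent: str, routes: dict[str, Optional[str]],
                        fallback: str) -> str:
    """Return the destination for this visitor, or the fallback if none is set."""
    return routes.get(detect_platform(user_agent)) or fallback


# Hypothetical routing table for one smart link variation.
routes = {
    "ios": "myapp://product/123",
    "android": None,  # no Android app destination configured yet
}
fallback = "https://yourwebsite.com/product/123"

samples = {
    "ios": "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X)",
    "android": "Mozilla/5.0 (Linux; Android 14; Pixel 8)",
    "desktop": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
}
for platform, ua in samples.items():
    print(platform, "->", resolve_destination(ua, routes, fallback))
```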

PIMMS simplifies this process with a real-time dashboard. This tool lets you monitor click distribution and track conversion events as they happen. Integrations with platforms like Stripe and Shopify ensure your revenue data is captured accurately from the start.

Document every detail of your test setup, including variations, traffic distribution, and success criteria. With everything verified, you can confidently monitor your test in real time and use the data to guide your next optimization efforts.

How to Monitor and Analyze Test Performance

Once your A/B test is live, keeping a close eye on the metrics is essential to identify which variation performs better. Primary metrics will tell you if the test meets its goals, while secondary metrics provide additional context to understand the results more fully.

Key Metrics to Monitor

The metrics you choose should directly reflect your test hypothesis and align with your business objectives.

"The A/B testing metrics you need to track depend on the hypothesis you want to test and your business goals." [1] - Contentsquare

Here are some important metrics to track:

- Click-through rate (CTR): This measures the percentage of users who click on your smart link compared to total impressions. It’s a great indicator of how well your link grabs attention across various channels and audiences.

- Conversion rate: This tracks the percentage of users who complete the desired action after clicking - whether it’s making a purchase, signing up for a newsletter, or downloading an app.

- Bounce rate: This shows the percentage of visitors who leave immediately without taking further action. A high bounce rate often signals a disconnect between what the link promises and the experience users find after clicking.

- Average session duration: This metric reveals how long users engage with your content, helping you gauge whether your smart link leads to relevant and engaging destinations.

For e-commerce campaigns, additional metrics come into play:

- Average order value (AOV): This tracks the average amount customers spend per transaction, helping you determine if certain variations attract higher-value customers or encourage larger purchases.

- Revenue tracking: This connects your test directly to your bottom line, showing which variations drive higher sales revenue.
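Here is a small Python sketch that computes each of these metrics from raw per-variation counts. The field names in `VariationStats` are assumptions for illustration. The denominators follow the definitions above: CTR is divided by impressions, conversion rate by clicks, and AOV by orders. Each click is treated as one landing visit for bounce rate and session duration, which is a simplifying assumption. The counts in the example are made up.

```python
from dataclasses import dataclass


@dataclass
class VariationStats:
    impressions: int
    clicks: int                   # each click is treated as one landing visit
    conversions: int
    bounces: int
    total_session_seconds: float
    orders: int
    revenue: float


def _ratio(numerator: float, denominator: float) -> float:
    # Return 0.0 instead of raising when a variation has no traffic yet.
    return numerator / denominator if denominator else 0.0


def summarize(s: VariationStats) -> dict[str, float]:
    """Core A/B metrics for one smart link variation."""
    return {
        "ctr": _ratio(s.clicks, s.impressions),
        "conversion_rate": _ratio(s.conversions, s.clicks),
        "bounce_rate": _ratio(s.bounces, s.clicks),
        "avg_session_seconds": _ratio(s.total_session_seconds, s.clicks),
        "aov": _ratio(s.revenue, s.orders),
        "revenue_per_click": _ratio(s.revenue, s.clicks),
    }


# Hypothetical counts for one variation.
a = VariationStats(impressions=20_000, clicks=1_000, conversions=45,
                   bounces=380, total_session_seconds=95_000.0,
                   orders=40, revenue=2_600.0)
print(summarize(a))
```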

These metrics integrate seamlessly into PIMMS's real-time analytics, which we’ll explore next.

Using Real-Time Analytics in PIMMS

Real-time analytics are vital for fine-tuning your smart link strategy. PIMMS provides live dashboards that track clicks, leads, conversions, and sales, giving you instant insights into performance across different segments.

With its advanced filtering system, you can break down data by UTM parameters, traffic sources, devices, countries, and campaigns. This level of segmentation helps uncover trends that aggregate data might obscure. For example, mobile users might respond better to app-directed links, while desktop users prefer web-based destinations. Similarly, certain geographic regions may show higher conversion rates with specific variations.
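Under the hood, segmentation is a group-by over click events. The plain-Python sketch below computes the conversion rate for each (variation, segment) pair from a list of click records. The record layout is a hypothetical export format, not PIMMS's API. The tiny sample dataset exists only to show the mechanics.

```python
from collections import defaultdict


def conversion_by_segment(events, segment_key):
    """Conversion rate per (variation, segment) from click-level records."""
    clicks = defaultdict(int)
    conversions = defaultdict(int)
    for e in events:
        key = (e["variation"], e[segment_key])
        clicks[key] += 1
        conversions[key] += e["converted"]  # True counts as 1
    return {key: conversions[key] / clicks[key] for key in clicks}


# Hypothetical click-level export: one record per click.
events = [
    {"variation": "app", "device": "mobile", "country": "FR", "converted": True},
    {"variation": "app", "device": "mobile", "country": "US", "converted": False},
    {"variation": "app", "device": "desktop", "country": "FR", "converted": False},
    {"variation": "web", "device": "mobile", "country": "US", "converted": False},
    {"variation": "web", "device": "desktop", "country": "FR", "converted": True},
    {"variation": "web", "device": "desktop", "country": "US", "converted": True},
]

for (variation, device), rate in sorted(conversion_by_segment(events, "device").items()):
    print(f"{variation:>4} {device:<8} {rate:.0%}")
```

Each extra segment dimension divides your traffic further, so make sure every segment has enough clicks before drawing conclusions from it.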

PIMMS also allows device-specific tracking, letting you see how smart links perform when redirecting users to mobile apps versus websites. Plus, integrations with tools like Stripe and Shopify ensure that revenue data flows directly into your analytics, tying link performance to real sales figures.

The platform’s shared dashboard feature is especially helpful for collaboration. It lets team members and clients monitor progress without needing full access to your account. This transparency keeps everyone informed and promotes decisions based on actual data rather than assumptions.

Compare Results Between Variations

Using the real-time data from PIMMS, you can compare variations to identify statistically significant differences. This step is crucial for distinguishing genuine performance improvements from random fluctuations.

Segment your analysis by user behavior and demographics to avoid misleading conclusions. Different user groups often react differently to the same variations. For instance, returning customers might prefer direct app links, while new users may need more context before committing to a download.

Visual tools like side-by-side comparisons in tables or dashboards make it easier to spot trends. Don’t just focus on conversion rates - examine the entire user journey, from the initial click to the final conversion. A variation with a lower click-through rate but a higher conversion rate might indicate better-qualified traffic.
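The arithmetic behind that last point: what ultimately counts is conversions per impression, which equals CTR multiplied by the post-click conversion rate. The rates in this sketch are hypothetical.

```python
def conversions_per_impression(ctr: float, conversion_rate: float) -> float:
    """End-to-end funnel yield: share of impressions that end in a conversion."""
    return ctr * conversion_rate


# Hypothetical variations: A attracts more clicks, B converts more of them.
variant_a = conversions_per_impression(ctr=0.05, conversion_rate=0.02)
variant_b = conversions_per_impression(ctr=0.04, conversion_rate=0.03)
print(f"A: {variant_a:.2%}  B: {variant_b:.2%}")  # → A: 0.10%  B: 0.12%
```

In this example, B loses on CTR but still produces more conversions from the same audience.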

Time-based analysis can also reveal patterns. Some variations may perform better on weekdays versus weekends, or their effectiveness might change as the test reaches different audience segments over time.

Finally, document unexpected findings, even if they come from secondary metrics or unanticipated behaviors. These insights can inspire future tests and help you refine your overall strategy for using smart links effectively.

How to Interpret Results and Optimize Campaigns

Once you've gathered data from your monitored metrics, the next step is turning those numbers into actionable insights. This means carefully analyzing the data to ensure your decisions are based on real performance trends, not random chance.

Determine Statistical Significance

Statistical significance helps you figure out whether the differences between your smart link variations are meaningful or just random noise. Start by defining a hypothesis and a null hypothesis. For example, your hypothesis might be that smart links opening directly in mobile apps will boost conversion rates by 15% compared to links redirecting to websites. The null hypothesis would state there’s no difference between the two.

Most marketers use a significance level (alpha) of 0.05, which means they accept a 5% chance of declaring a winner when there is actually no difference between the variations. This corresponds to a 95% confidence level, though a 90% threshold is sometimes acceptable [8][9]. The p-value is your key metric: it is the probability of seeing a difference at least as large as the one you observed if the null hypothesis were true [7]. If your p-value is below your alpha level, the result is statistically significant.

PIMMS’s analytics dashboard simplifies this by providing the sample sizes and conversion data you need to calculate significance. You can also use standard tests like Chi-square tests for categorical data (e.g., clicks versus no clicks) or Z-tests for comparing conversion rates between variations [7]. Just remember - rushing tests or using small sample sizes can lead to unreliable conclusions.
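If you want to check the numbers by hand, here is a hedged Python sketch of the two tests named above, using only the standard library (`statistics.NormalDist` requires Python 3.8+). The first function is the standard pooled two-proportion z-test. The second is a Pearson chi-square on the 2x2 table of converted versus not converted. For a 2x2 table without continuity correction, the chi-square statistic equals z squared, so the two tests reach the same conclusion. The conversion counts in the example are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided pooled z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # common rate under the null hypothesis
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def chi_square_2x2(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Pearson chi-square statistic (no continuity correction) for a 2x2 table."""
    observed = [[conv_a, n_a - conv_a], [conv_b, n_b - conv_b]]
    total = n_a + n_b
    row_totals = [n_a, n_b]
    col_totals = [conv_a + conv_b, total - conv_a - conv_b]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed[i][j] - expected) ** 2 / expected
    return chi2


# Hypothetical results: website link (A) vs. app link (B), 5,000 clicks each.
z, p = two_proportion_z_test(conv_a=200, n_a=5_000, conv_b=250, n_b=5_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at alpha=0.05: {p < 0.05}")
print(f"chi-square = {chi_square_2x2(200, 5_000, 250, 5_000):.2f}")
```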

Once you’ve confirmed statistical significance, it’s time to apply these insights to your campaign.

Implement Winning Variations

After identifying the top-performing variation, roll it out strategically. Start with a phased rollout to minimize risks - gradually exposing more traffic to the winning variation lets you monitor for any unexpected issues. In PIMMS, update your smart link configurations to reflect the winning elements. For example, if branded short links with specific call-to-action text perform better, make those changes across your campaigns. PIMMS’s tracking tools ensure proper attribution as you scale.
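You can express a phased rollout as a ramp schedule with a guardrail check at each step. The sketch below is hypothetical throughout: the ramp percentages, the guardrail rule, and the function name are assumptions, not PIMMS settings. The guardrail says the observed conversion rate must not fall more than a set fraction below a baseline you choose, such as the rate the winner achieved during the test.

```python
RAMP = [0.10, 0.25, 0.50, 1.00]  # share of traffic sent to the winning variation


def next_traffic_share(current: float, observed_rate: float,
                       baseline_rate: float, max_drop: float = 0.10) -> float:
    """Advance one ramp step if the guardrail holds; otherwise roll back to 0."""
    if observed_rate < baseline_rate * (1 - max_drop):
        return 0.0  # guardrail tripped: send all traffic back to the previous link
    later_steps = [step for step in RAMP if step > current]
    return later_steps[0] if later_steps else current


# Hypothetical check-ins during a rollout, against a 4.5% baseline.
share = 0.0
for observed in (0.050, 0.048, 0.039):
    share = next_traffic_share(share, observed, baseline_rate=0.045)
    print(f"observed {observed:.1%} -> winning variation gets {share:.0%} of traffic")
```

The same pattern works with a secondary metric such as bounce rate as the guardrail. In that case, flip the comparison so that a rise, rather than a drop, triggers the rollback.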

Keep an eye on secondary metrics, like session duration and bounce rate, to confirm the winning variation maintains overall engagement. If you notice negative trends in these metrics, weigh the trade-offs against your broader business goals.

Document every change and track post-implementation performance. PIMMS’s real-time analytics make it easy to spot any performance dips and adjust as needed. As you integrate the winning variation, continue refining your strategy by analyzing the results of each experiment.

Best Practices for Continuous Improvement

A/B testing isn’t a one-and-done process - it’s an ongoing effort to better understand your audience. For each test, document your hypothesis, the elements you changed, test duration, traffic volume, results, and conclusions [10]. This record-keeping helps avoid repeating past mistakes and highlights patterns across multiple tests.
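A lightweight way to keep that record consistent is a fixed structure for each entry. The Python sketch below is one possible shape, with fields mirroring the list above. The field names and the JSON export are illustrative assumptions, and the example entry is invented.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date
from typing import Optional


@dataclass
class ExperimentRecord:
    """One entry in a team's A/B test log (field names are illustrative)."""
    name: str
    hypothesis: str
    changed_elements: list[str]
    start: date
    end: date
    traffic_split: dict[str, float]
    clicks_per_variation: dict[str, int]
    primary_metric: str
    result: str
    conclusion: str
    p_value: Optional[float] = None
    notes: list[str] = field(default_factory=list)

    @property
    def duration_days(self) -> int:
        return (self.end - self.start).days

    def to_json(self) -> str:
        data = asdict(self)
        # Dates are not JSON-serializable, so store them as ISO strings.
        data["start"], data["end"] = self.start.isoformat(), self.end.isoformat()
        return json.dumps(data, indent=2)


# Hypothetical log entry.
record = ExperimentRecord(
    name="spring_sale_app_vs_web",
    hypothesis="Opening the app directly lifts conversion rate vs. the website",
    changed_elements=["destination"],
    start=date(2024, 3, 4),
    end=date(2024, 3, 25),
    traffic_split={"web": 0.5, "app": 0.5},
    clicks_per_variation={"web": 5_000, "app": 5_000},
    primary_metric="conversion_rate",
    result="web 4.0%, app 5.0% (example values)",
    conclusion="Example entry only",
)
print(record.duration_days, "days")
print(record.to_json())
```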

Take advantage of PIMMS’s shared dashboard to keep your team on the same page. When everyone has access to test results and insights, it’s easier to apply findings consistently across campaigns. Dive deeper by segmenting your analysis based on user characteristics like device type, location, or whether visitors are new or returning. PIMMS’s advanced filtering tools make this easy.

For future tests, focus on areas with the highest potential impact and lowest implementation effort [10]. Start with simple, high-impact changes before tackling more complex experiments. Always test one variable at a time to build a solid foundation for ongoing optimization. This methodical approach ensures you’re continually learning and improving, even from tests that don’t deliver the results you expected.
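One simple way to apply the impact-versus-effort rule is to score each idea by its impact-to-effort ratio and test the highest scores first. This is a scoring scheme chosen for illustration, not necessarily the method described in [10]. The 1 to 5 scales and the backlog items are hypothetical team estimates.

```python
from typing import NamedTuple


class TestIdea(NamedTuple):
    name: str
    impact: int   # expected impact, 1 (low) to 5 (high), a team estimate
    effort: int   # implementation effort, 1 (low) to 5 (high)


def prioritize(ideas: list[TestIdea]) -> list[TestIdea]:
    """Highest impact per unit of effort first; ties broken by higher impact."""
    return sorted(ideas, key=lambda i: (-i.impact / i.effort, -i.impact))


backlog = [
    TestIdea("new landing page layout", impact=5, effort=4),
    TestIdea("CTA text on branded short link", impact=3, effort=1),
    TestIdea("app vs. website destination", impact=4, effort=2),
    TestIdea("link preview image", impact=2, effort=2),
]
for idea in prioritize(backlog):
    print(f"{idea.impact / idea.effort:.2f}  {idea.name}")
```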

Conclusion

A/B testing for smart links takes the guesswork out of decision-making and turns it into actionable insights. By systematically comparing different versions of your smart links, you can better understand user behavior and preferences, allowing you to fine-tune your strategies for greater effectiveness [11]. The process requires careful planning, execution, and analysis, but it has become standard practice: 81% of marketers use A/B testing to improve conversion rates [12].

Key Takeaways

Here’s a quick recap of the steps to successful smart link A/B testing:

- Start with clear goals: Define your KPIs, set up tracking parameters, and choose the right tools.

- Focus on one element at a time: Whether it’s your call-to-action text, branded short links, or destination pages, isolating variables ensures cleaner results.

- Ensure proper traffic distribution: Configuring traffic correctly is crucial for statistical validity. Double-check your setup before launching.

- Leverage advanced monitoring tools: Use PIMMS’s real-time dashboard and filtering features to analyze metrics like UTM parameters, device type, location, and campaign performance. This helps you uncover insights about different user groups.

- Validate results with statistical significance: Make decisions based on true performance differences, not random fluctuations.

- Document everything: Record your hypotheses, test elements, results, and conclusions to create a knowledge base for future campaigns.

Remember, even tests that don’t yield the desired results can offer valuable lessons. Treat A/B testing as a continuous process - it’s the key to staying ahead.

Next Steps for Marketers

Ready to put these insights into action? Start with simple, high-impact tests to build confidence. For instance, test whether branded short links with specific call-to-action text outperform generic URLs in driving conversions.

As you gain experience, expand your testing efforts. PIMMS offers flexible plans to support your needs, whether you’re just starting or scaling up. Begin testing today, and see how systematic experimentation with smart links can elevate your conversion rates.

FAQs

How can smart links improve A/B testing in marketing campaigns?

Smart links enhance the effectiveness of A/B testing in marketing campaigns by delivering precise tracking and analytics for each link variation. This gives marketers a clear view of which option drives higher user engagement and better conversion rates.

With real-time insights into metrics like clicks, leads, and sales, smart links empower data-driven decisions to fine-tune campaigns. Plus, they ensure a smooth user experience by automatically guiding audiences to the correct app or platform, boosting engagement and helping to get the most out of your marketing investment.

What metrics should I track to evaluate the success of smart link A/B tests?

To gauge the effectiveness of smart link A/B tests, keep an eye on these essential metrics:

- Conversion Rate: This tells you how many users followed through with the intended action, whether it's completing a purchase, signing up, or any other goal.

- Click-Through Rate (CTR): Shows the percentage of people who saw your link and clicked it, offering a snapshot of engagement.

- Bounce Rate: Reflects the percentage of visitors who left without interacting further, signaling potential issues with the content or user experience.

These numbers shed light on how users interact with your smart link variations. By diving into this data, you can fine-tune your campaigns to achieve stronger engagement and better outcomes.

How do I ensure my A/B test results for smart links are statistically significant?

When running A/B tests for smart links, it's crucial to ensure your results are reliable. Here's how you can achieve that:

- Set a confidence level: Aim for a confidence level of at least 95%. This means that if the variations truly performed the same, there would be at most a 5% chance of seeing a difference as large as the one you measured.

- Get the sample size right: Your test needs enough participants to detect meaningful differences between the variations. Using tools like sample size calculators can help you determine how many participants are required.

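- Give it enough time: Don't cut the test short. Decide on the sample size or duration in advance and let the test run until you reach it, even if significance appears earlier. Stopping as soon as results look significant inflates the chance of a false positive.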
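If you'd rather compute the sample size than rely on an online calculator, here is a hedged Python sketch of the standard formula for comparing two proportions with a two-sided test. The 80% power default is a common convention and an assumption here. The example inputs are hypothetical: they reuse the 4.3% median conversion rate as a baseline and the 15% relative lift from the earlier hypothesis example.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline: float, relative_lift: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Clicks needed per variation for a two-sided two-proportion test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical inputs: 4.3% baseline conversion rate, 15% relative lift.
n = sample_size_per_variation(baseline=0.043, relative_lift=0.15)
print(f"{n:,} clicks per variation")
```

Smaller expected lifts drive the required sample size up quickly, which is why low-traffic links often need several weeks to reach a reliable result.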

By sticking to these guidelines, you can trust your A/B test results and make informed decisions to improve your smart link performance.