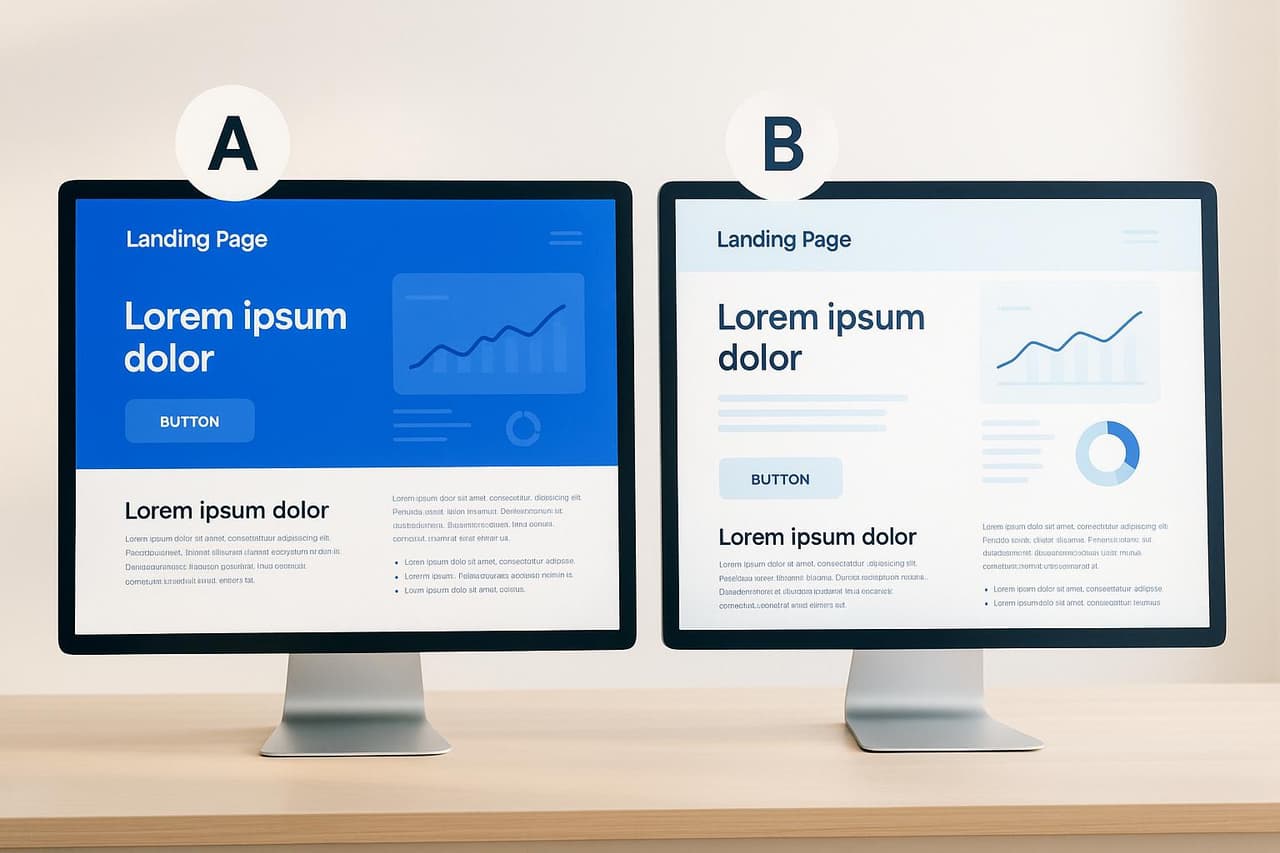

A/B testing helps agencies make data-driven decisions to improve client campaigns. Instead of guessing, agencies can test two versions of a webpage or digital asset to see which performs better. This method boosts conversions, reduces risks, and provides clear ROI for clients.

Key Takeaways:

- What is A/B Testing? Comparing two versions of a digital asset to find the best performer.

- Why it’s important: Helps agencies improve conversion rates, revenue, and client satisfaction.

- How to do it effectively: Use tools for quick setup, test one variable at a time, and ensure tests run long enough to gather reliable data.

- Reporting results: Present clear, client-focused metrics like conversion rates, revenue impact, and ROI.

- Scaling across clients: Adapt successful tests, maintain compliance with privacy laws, and focus on continuous improvement.

A/B testing is not just a one-time task - it’s an ongoing process that helps agencies refine strategies, prove their value, and achieve measurable results for clients.

Setting Up A/B Tests Efficiently

Quick Test Deployment

Launching A/B tests across multiple client accounts doesn’t have to be a time-consuming process. Modern platforms make it possible to get tests up and running in minutes, skipping the need for complex coding or drawn-out setups.

The secret? Tools with user-friendly visual editors and drag-and-drop interfaces. These features empower even non-technical team members to create test variations without writing a single line of code. They can quickly tweak headlines, swap images, adjust button colors, or even restructure entire page sections with simple point-and-click actions [1][2].

"A good A/B testing software can help you optimize conversions for landing pages, websites, campaigns, emails, and more by providing the following benefits: Streamline processes, simplify data analysis, preserve SEO, improve efficiency with automation, discover hidden gems, open up A/B testing for all." - Paul Park, Writer on Unbounce's content team [1]

Pre-designed templates also come in handy, offering ready-made testing frameworks that can be customized to meet specific client needs. This is especially useful when working with similar industries or testing common elements like call-to-action buttons or product page layouts [1].

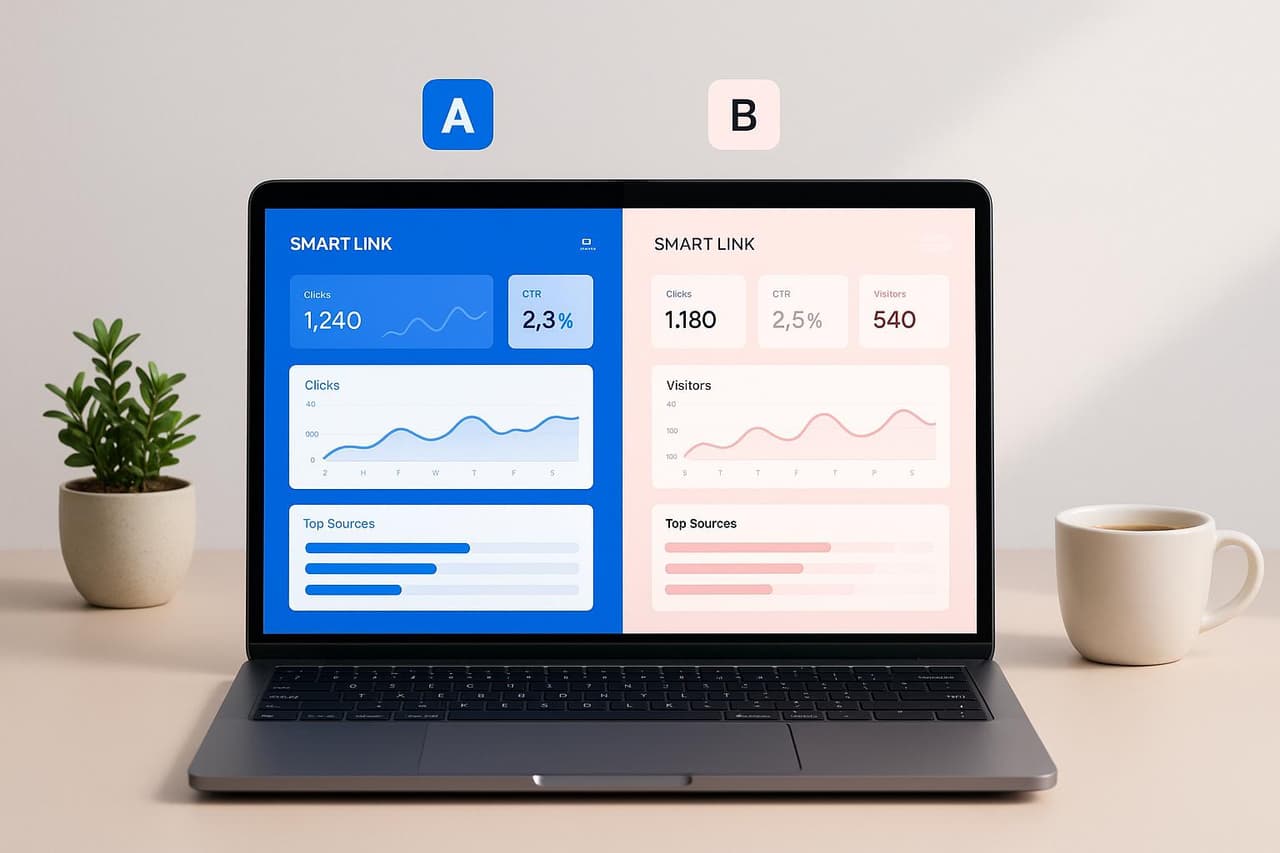

Platforms like PIMMS take things further by combining A/B testing with smart link tracking. For example, agencies can set up tests comparing different landing page destinations while using branded short links to automatically track conversions. With integrations for tools like Stripe and Shopify, conversion data flows straight into your analytics dashboard - no extra setup required.

Advanced targeting features allow for personalized tests based on user demographics, behavior, or traffic sources [1]. This means you can run multiple variations tailored to different audience segments, squeezing more insights out of every test cycle.

For agencies managing high-volume campaigns, cookieless tracking ensures compliance with privacy regulations while maintaining accurate data collection [2]. Once tests are deployed efficiently, the next step is managing them effectively across multiple campaigns.

Managing Multiple Campaigns at Once

Handling dozens of A/B tests across various client accounts can feel overwhelming. The challenge lies not just in running the tests but also in maintaining oversight, avoiding conflicts, and ensuring reliable results from each experiment.

Sequential testing is the most precise method, as it involves running one test at a time per client. While this ensures clean data, it can slow down the overall testing process [6].

On the other hand, mutually exclusive testing strikes a balance. By running multiple tests simultaneously while ensuring each user is enrolled in only one of them, this method increases testing throughput without compromising data accuracy. However, because traffic is split across experiments, it does slightly reduce the statistical power of individual experiments [6].
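One way to keep concurrent tests mutually exclusive is to bucket users deterministically by hashing a stable identifier. The sketch below is illustrative only: the function name, experiment names, and the use of SHA-256 are assumptions, not how any particular platform does it.

```python
import hashlib


def assign_exclusive(user_id, experiments, variants=("A", "B")):
    """Place a user in exactly one experiment and one variant.

    The same user_id always maps to the same pair, so returning visitors
    keep a consistent experience and never enter two experiments at once.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Separate slices of the hash choose the experiment and the variant,
    # so the variant split is not tied to the experiment split.
    exp_index = int.from_bytes(digest[:8], "big") % len(experiments)
    var_index = int.from_bytes(digest[8:16], "big") % len(variants)
    return experiments[exp_index], variants[var_index]


# Hypothetical experiment names for a single client account.
running = ["hero-headline-test", "cta-color-test"]
print(assign_exclusive("visitor-1001", running))
```

Because assignment depends only on the identifier, no lookup table is needed, though adding or removing an experiment reshuffles the buckets.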

For high-traffic clients, overlapping testing can speed up experimentation. While it requires careful coordination to avoid conflicts, this approach allows multiple elements to be tested concurrently without heavily impacting statistical significance [6].

Multivariate testing is another option, allowing you to test multiple variables in a single experiment. While powerful, this method increases complexity and demands larger sample sizes to achieve reliable results [6].

Platforms like PIMMS simplify campaign management with shared dashboards that provide real-time visibility across all client accounts. You can filter results by UTM parameters, traffic sources, devices, regions, and individual campaigns, making it easy to identify which tests are succeeding and which need adjustments.

UTM parameters are particularly helpful for tracking performance across different channels. By using consistent naming conventions, agencies can quickly compare results from email campaigns, YouTube posts, and paid ads [7].
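A consistent naming convention is easier to enforce in code than by hand. The following is a minimal sketch using Python's standard library; the helper name and the lowercase-with-hyphens convention are assumptions chosen for illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def build_utm_url(base_url, source, medium, campaign, content=None):
    """Append normalized UTM parameters to a landing page URL."""

    def normalize(value):
        # Assumed convention: trimmed, lowercase, hyphens instead of spaces.
        return value.strip().lower().replace(" ", "-")

    utm = {
        "utm_source": normalize(source),
        "utm_medium": normalize(medium),
        "utm_campaign": normalize(campaign),
    }
    if content:
        # utm_content is a natural place to record the test variation.
        utm["utm_content"] = normalize(content)

    parts = urlsplit(base_url)
    # Keep any query parameters the URL already carries.
    query = parse_qsl(parts.query, keep_blank_values=True) + list(utm.items())
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(query), parts.fragment)
    )


print(build_utm_url("https://example.com/landing", "Newsletter", "Email",
                    "Spring Sale", content="Variant B"))
```

Normalizing values at creation time avoids "Email" and "email" showing up as separate channels in reports.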

Integration capabilities also streamline operations. By connecting client accounts to analytics tools, CRM systems, and e-commerce platforms, you can create a unified performance view without manual data exports or complex reporting setups. With campaigns under control, the focus shifts to designing tests that deliver actionable insights.

Best Practices for Test Design

Running effective A/B tests isn’t just about tweaking a headline or a button color. The most successful tests follow structured approaches to ensure clear insights and avoid inconclusive results.

Start by testing one variable at a time. This isolates the impact of each change, making it easier to understand what drives performance improvements. A study of 2,732 A/B tests revealed that single-variable experiments provided more reliable insights than those testing multiple elements simultaneously [3].

Prioritize elements above the fold and focus on mobile optimization. First impressions are formed within milliseconds, and with over 63% of global web traffic coming from mobile devices, headlines, hero images, and primary call-to-action buttons should be top priorities for testing [3]. These elements directly influence whether users engage or leave.

Trust-building features can also significantly boost conversions. Display return policies clearly, highlight customer reviews and star ratings, and place security badges near checkout buttons. These elements address common hesitations and can make a notable difference in conversion rates [3]. For example, a skin health supplement brand saw an 80% increase in add-to-cart rates by optimizing its hero section, emphasizing ingredients, and refining call-to-action placement [3].

Before launching any test, create a clear hypothesis. This ensures your tests address real challenges rather than random tweaks. Keep the number of variations manageable - fewer than seven is ideal. Too many variations can dilute traffic across groups, making it harder to reach statistical significance within a reasonable timeframe [4].

Use power calculators to determine the right sample size and run tests for at least one to two weeks to account for traffic patterns. Resist the urge to end tests early, even if initial results look promising [5].
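For a rough sense of what a power calculator does, here is a sketch of the standard two-proportion sample size approximation. The defaults of 5% significance and 80% power are common conventions assumed here, not figures from the sources above, and the function name is illustrative.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant for a two-sided test.

    baseline_rate: current conversion rate as a fraction (0.05 means 5%).
    relative_lift: smallest relative improvement worth detecting (0.20 means +20%).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Example: 5% baseline, aiming to detect a 20% relative lift (5% -> 6%).
print(sample_size_per_variant(0.05, 0.20))
```

Dividing the result by a client's daily traffic per variant gives a first estimate of test duration, which can then be rounded up to full weeks.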

One famous example comes from the Obama campaign, which changed a button text from "Sign Up" to "Learn More." This simple tweak boosted the sign-up rate by 40.6% and brought in an additional $60 million in donations [3].

Monitor your data in real-time to catch technical issues early, but avoid making decisions based on incomplete results. Factors like seasonal events, technical glitches, or unusual traffic spikes can skew outcomes, so keep an eye out for these [5].

Finally, document every insight, recommendation, and action step. This creates a valuable knowledge base for future campaigns, ensuring you build on past successes [5].

Reporting Results and Attribution

Effective testing is only half the battle. The real challenge lies in turning raw data into actionable insights that clients can understand and use to measure ROI. Let’s break down how to create impactful reports, show performance metrics, and prove the value of your work.

Creating Client-Ready Reports

A well-crafted report does more than just present data - it demonstrates your value to the client. It’s your chance to highlight what’s working, address challenges, and strengthen your relationship by aligning insights with their goals.

Tailor each report to the client’s priorities. Speak their language by focusing on metrics that matter most to them. For instance, a retail client might care deeply about cart abandonment rates and average order value, while a SaaS company may zero in on trial-to-paid conversion rates and user engagement.

To make reports digestible, include concise executive summaries, clear visualizations, and brief annotations. Use strategic color highlights and straightforward titles to guide the reader. Tools like PIMMS simplify this process by offering shared dashboards that generate client-specific reports automatically. With its filtering features, you can create tailored views that focus on the campaigns, traffic sources, and metrics most relevant to the client’s objectives.

Don’t just present numbers - add context. For example, instead of simply stating that conversion rates rose by 2.3%, explain how that compares to past performance and industry standards. Adding benchmarks and historical comparisons helps clients see the bigger picture and understand the significance of the results.

Once your reports are customized and clear, the next step is presenting the key metrics that validate your client’s strategy.

Showing Performance Metrics

Performance metrics are the backbone of any report. Highlight primary metrics tied to core business goals, like revenue, conversions, or customer retention. Secondary metrics, such as bounce rates or click-through rates, provide additional context to help interpret the results.

Use US-standard formats to ensure clarity. Display currency as $1,234.56, percentages as 12.34%, and dates in MM/DD/YYYY format. For large numbers, include commas as thousand separators - for example, 1,234,567 visitors or $2,345,678 in revenue.
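If reports are generated programmatically, these conventions can live in a few small helpers. This is a minimal sketch with hypothetical function names, using only Python's built-in formatting.

```python
from datetime import date


def fmt_currency(amount):
    """Format a number as US dollars, e.g. $1,234.56."""
    return f"${amount:,.2f}"


def fmt_percent(value):
    """Format a value already expressed in percent, e.g. 12.34%."""
    return f"{value:.2f}%"


def fmt_count(n):
    """Format an integer with comma thousand separators."""
    return f"{n:,}"


def fmt_date(d):
    """Format a date as MM/DD/YYYY."""
    return d.strftime("%m/%d/%Y")


print(fmt_currency(2345678), fmt_percent(12.34),
      fmt_count(1234567), fmt_date(date(2025, 3, 7)))
```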

To calculate revenue impact, use this formula:

Average Order Value × Additional Conversions

Here, additional conversions are the number of visitors multiplied by the difference between the new and old conversion rates (both expressed as percentages), divided by 100. For example, 50,000 visitors and a lift from 2.0% to 2.3% works out to 150 additional conversions. Tools like PIMMS streamline these calculations by pulling real revenue data from platforms like Stripe and Shopify. Its filtering options allow you to segment results by device, location, traffic source, and campaign, making it easy to pinpoint which audiences respond best to specific strategies.
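The arithmetic can be sketched in a few lines. This example assumes conversion rates are percentages of visitors, so additional conversions come from the visitor count times the percentage-point change. The function names and figures are illustrative and not part of any platform's API.

```python
def additional_conversions(visitors, old_rate_pct, new_rate_pct):
    """Extra conversions from a rate change, with rates given in percent."""
    return visitors * (new_rate_pct - old_rate_pct) / 100


def revenue_impact(average_order_value, visitors, old_rate_pct, new_rate_pct):
    """Average Order Value x Additional Conversions."""
    extra = additional_conversions(visitors, old_rate_pct, new_rate_pct)
    return average_order_value * extra


# Hypothetical month: 50,000 visitors, rate moves from 2.0% to 2.3%, AOV of $80.
extra = additional_conversions(50_000, 2.0, 2.3)
impact = revenue_impact(80.0, 50_000, 2.0, 2.3)
print(f"{extra:.0f} additional conversions, ${impact:,.2f} in added revenue")
```

Being explicit about whether a lift is in percentage points or relative terms avoids the most common misreading of test results in client reports.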

Time-based comparisons are also essential. Show week-over-week, month-over-month, and year-over-year trends to help clients identify whether improvements are lasting or temporary. Performance scorecards can be a great addition - summarizing key metrics in an easy-to-read format while including details like confidence levels, test duration, and sample sizes. This transparency builds trust and reinforces the rigor behind your recommendations.
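The confidence level on a scorecard usually comes from a significance test. Below is a sketch of a pooled two-proportion z-test, one common choice among several; testing platforms may use other methods, and the function name and sample numbers are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two_sided_p_value) comparing variant B against control A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No variation in the data (all or no visitors converted).
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical result: control converts 500 of 10,000, variant 600 of 10,000.
z, p = two_proportion_z_test(500, 10_000, 600, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```

Reporting the p-value alongside sample sizes and test duration lets clients see how much evidence sits behind each recommendation.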

These metrics not only provide a snapshot of performance but also lay the groundwork for calculating ROI.

Proving ROI to Clients

ROI is the ultimate proof of value, yet only 38% of marketers effectively demonstrate it through testing programs. That’s what makes a clear ROI calculation a key differentiator.

Here’s the formula for ROI:

ROI = ((Incremental Profit – Experimentation Costs) / Experimentation Costs) × 100

Make sure to include all costs, such as platform fees and labor, and ensure accurate attribution through automatic tracking tools like PIMMS. Attribution modeling is essential - it links test outcomes directly to revenue, from the first click to the final purchase.
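As a worked example of the ROI formula, here is a minimal sketch that treats platform fees and labor as the experimentation costs. The function name and dollar amounts are made up for illustration.

```python
def experimentation_roi(incremental_profit, platform_fees, labor_cost):
    """ROI (%) = ((Incremental Profit - Experimentation Costs) / Experimentation Costs) x 100."""
    costs = platform_fees + labor_cost
    if costs <= 0:
        raise ValueError("experimentation costs must be positive")
    return (incremental_profit - costs) / costs * 100


# Hypothetical quarter: $12,000 incremental profit, $500 in fees, $2,500 in labor.
print(f"ROI: {experimentation_roi(12_000, 500, 2_500):.1f}%")
```

With these figures the costs total $3,000, so the program returns $3 in net profit for every $1 spent, or 300%.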

"Experimentation has two main value concepts I think of when considering return: validated value and opportunity cost. Validated value is the revenue improvements validated through experiments. Opportunity cost is the revenue loss from experiments that, in a world without testing, would've been in the 'just do it' pile."

- Jess Vandenbruggen, Head of Digital Experience at Drumline Digital [8]

Scorecards are an excellent way to communicate ROI to stakeholders. Each scorecard should include the test hypothesis, implementation costs, revenue impact, and long-term value. This format makes it easy for decision-makers to grasp the financial impact of your work.

When calculating ROI, go beyond immediate results. Consider long-term factors like increased customer lifetime value, improved retention rates, and reduced acquisition costs. Some tests might show modest short-term gains but deliver substantial benefits over time by enhancing the user experience.

Don’t overlook opportunity costs. Highlight the potential revenue losses that could occur without testing. This not only underscores the value of your experimentation program but also shows its role in protecting against missed opportunities.

Establishing ROI benchmarks can help clients understand how much they should invest in testing programs. By showing the relationship between budgets, expected win rates, and projected revenue, you can justify continued investment and set realistic expectations for future returns.

Finally, keep ROI front and center with regular updates. Monthly or quarterly summaries that showcase the cumulative impact of all tests reinforce the ongoing value of your work and provide compelling evidence for expanding the program.

Growing and Scaling A/B Testing Across Clients

Once you've established effective reporting and ROI practices, the next step is scaling your A/B testing efforts across multiple clients while maintaining high standards. Successful agencies don’t just run isolated tests - they create structured systems that deliver ongoing value over time.

Continuous Testing for Better Results

Top-performing agencies view A/B testing as a continuous process rather than a series of isolated experiments. This approach not only strengthens client relationships but also drives consistent campaign improvements.

Keep a detailed record of every test. Document hypotheses, methodologies, results, and key takeaways in a centralized system. This avoids redundant work and helps uncover patterns across clients and industries. For instance, if a specific call-to-action works well for e-commerce clients, you might adapt it for new retail accounts.

Set clear objectives for each test and align them with broader business goals [11]. Avoid random tests by building on prior results. For example, if urgency-based messaging boosted conversions by 15% for one client, consider testing similar messaging for a related industry.

Stick to testing one variable at a time for precise results. While single-variable tests may take longer, they produce cleaner data that can be applied confidently to other campaigns. Multi-variable tests often yield mixed results that are harder to replicate across different accounts.

A great example of this is AgencyAnalytics' Google Ads experiment. They split traffic evenly between their current strategy and a variant using Target CPA settings. Over 30 days, the variant achieved a 20% higher click-through rate and a 123% jump in conversions. These clear results allowed them to confidently apply the changes across the entire campaign [11].

When deciding what to test, use prioritization frameworks to focus on high-impact opportunities [9]. While popular methods like PIE (Potential, Importance, Ease) and ICE (Impact, Confidence, Ease) are helpful, they can be subjective. The PXL framework, which asks specific questions about the changes being tested, offers a more objective way to compare opportunities across client accounts [9].
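To show how a scoring framework turns into a ranked backlog, here is a small ICE sketch. It assumes each factor is rated 1 to 10 and that the score is the simple average, which is one common convention (some teams multiply instead). The class name and test ideas are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    name: str
    impact: int      # expected effect on the target metric, 1-10
    confidence: int  # how sure the team is that it will work, 1-10
    ease: int        # how cheap it is to build and launch, 1-10

    @property
    def ice_score(self):
        # Assumed convention: average of the three ratings.
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(ideas):
    """Return ideas sorted from highest to lowest ICE score."""
    return sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)


backlog = [
    ExperimentIdea("Shorten checkout form", impact=8, confidence=6, ease=4),
    ExperimentIdea("Rewrite hero headline", impact=6, confidence=7, ease=9),
    ExperimentIdea("Add trust badges", impact=5, confidence=5, ease=9),
]
for idea in prioritize(backlog):
    print(f"{idea.ice_score:.1f}  {idea.name}")
```

The ratings are still judgment calls, which is the subjectivity noted above, but writing them down makes disagreements visible and comparable across accounts.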

"Data is the antidote to delusion"

- Alistair Croll and Benjamin Yoskovitz [9]

Let the data guide your next steps, not assumptions. As you refine your testing methods, applying these insights to new client segments becomes second nature.

Scaling Successful Campaigns

Scaling successful tests across clients requires a balanced approach that ensures both efficiency and customization. The goal isn’t to copy and paste but to adapt proven strategies to fit different contexts.

Look for recurring success patterns in your tests. Whether it’s a particular design tweak, messaging style, or user experience improvement, these patterns can help you prioritize which tests to run for new clients.

Develop adaptable test templates. Include the hypothesis, key variables, success metrics, and implementation steps. These templates streamline deployment while maintaining consistency.
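A template can be as simple as a structured record that is copied and adjusted per client. This sketch uses a frozen dataclass so the master template is never modified by accident; all field values and names are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ExperimentTemplate:
    hypothesis: str
    key_variables: tuple
    success_metrics: tuple
    implementation_steps: tuple
    client: str = "unassigned"

    def adapt_for(self, client, **overrides):
        """Return a copy for one client, overriding only what differs."""
        return replace(self, client=client, **overrides)


cta_template = ExperimentTemplate(
    hypothesis="A benefit-led CTA label will raise click-through",
    key_variables=("cta_label",),
    success_metrics=("click_through_rate", "conversion_rate"),
    implementation_steps=("Create variant", "Split traffic 50/50", "Run two weeks"),
)

retail_test = cta_template.adapt_for(
    "Acme Retail",
    hypothesis="A benefit-led CTA label will raise add-to-cart rate",
    success_metrics=("add_to_cart_rate",),
)
print(retail_test.client, retail_test.success_metrics)
```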

Segment clients by industry, audience, business model, or traffic volume. This segmentation helps you identify which successful tests are most likely to work for specific groups.

Tools like PIMMS make scaling easier by offering advanced filtering and unified dashboards. For example, you can break down results by device, location, traffic source, and campaign. If mobile users in a certain region respond better to a specific link format, you can quickly apply those insights to similar segments.

Maintain statistical rigor when scaling. Ensure each new implementation has enough data and runs long enough to produce reliable results. Just because a test worked for one client doesn’t guarantee it will for another. Treat each scaled test as a new experiment with proper measurement protocols.

When a test proves successful, integrate its winning elements into broader strategies. For instance, update call-to-action buttons, redesign website templates, or adjust default campaign settings across your client accounts. This ensures the improvements have lasting impact [10].

Maintaining Privacy and Compliance

Privacy regulations are a critical consideration for A/B testing. One projection held that by the end of 2023, 75% of the global population would have their personal data protected under modern privacy laws [14]. While scaling tests can expand your reach, robust privacy practices are essential to safeguard client data.

Most A/B tests don’t require Personally Identifiable Information (PII), which simplifies compliance [12]. Focus on using behavioral data, conversion metrics, and aggregate performance indicators instead of tracking individuals. This reduces privacy risks while still providing actionable insights.

Regularly audit your testing processes to ensure compliance [12]. Create a checklist that includes identifying applicable laws (like GDPR, CPRA, PIPL, and LGPD), reviewing your tools, and updating your data-handling practices. Perform these audits when onboarding new clients, especially those in regions with specific regulations.

"GDPR isn't a tedious compliance issue that costs time and money; it is an opportunity to strengthen your consumer audience, provided you have the right optimization programs in place."

- André Morys, KonversionsKRAFT [14]

Minimize data collection by adopting a "verify not store" approach [13]. Gather only the data necessary for your tests and delete unnecessary information based on retention policies.

Transparency builds trust. Always get explicit consent for data collection, use secure systems, and ensure data is only used for its intended purpose. Make it easy for users to opt out or manage their preferences [13].

"One of the assumptions that people have about healthcare companies is that we're going to be trustworthy and reliable. Because HIPAA exists, that's implicit. We also found that consumers give more data use latitude to a healthcare company if it helps them. So it puts the onus on the healthcare company to ask, 'Is what I'm doing helpful for the consumer?' It should never just be helpful to you."

- Marc Schwartz, Providence Health and Services [14]

Use technical safeguards like Zero Trust architecture, tokenization, and pseudonymization to protect data. Regularly check data integrity and consider immutable storage solutions for critical data [13].
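As one illustration of pseudonymization, a keyed hash can replace raw user identifiers before test data is stored. This is a minimal sketch, assuming the secret key is kept outside the analytics dataset. The function name and key are placeholders, and it is not a complete compliance solution.

```python
import hashlib
import hmac


def pseudonymize(user_id, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC).

    The same input and key always give the same token, so visits can still
    be grouped per user, but the token cannot be reversed without the key.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder key for illustration; a real key would come from a secrets manager.
key = b"replace-with-a-secret-from-your-vault"
token = pseudonymize("jane.doe@example.com", key)
print(token[:16], "...")
```

Using a separate key per client keeps tokens from one account from being matched against another account's data.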

Lastly, remember that cookie regulations, such as those from the ePrivacy Directive, and policies from Apple, Mozilla, and Google, can affect your ability to gather data for testing [12]. These restrictions should be factored into your testing plans and client communications.

PIMMS addresses many of these challenges with privacy-conscious features, ensuring compliance while still supporting effective A/B testing and reporting.

Conclusion: Getting Results with A/B Testing

When it comes to A/B testing, a well-rounded approach is what drives consistent results. For agencies, success lies in smooth workflows, open communication with clients, and scaling strategies that make sense.

One of the biggest game-changers? Automation. Automated A/B testing tools handle tasks like audience segmentation and data analysis, freeing up your team to focus on crafting winning strategies. This becomes crucial when managing multiple client accounts while aiming for reliable results, like maintaining a 95% confidence level across all tests [15]. But automation alone isn’t enough - it needs to be guided by a clear plan.

"A team without an A/B testing roadmap is like driving in a thick fog. It's much more difficult to see where you're going. The bigger the program and organization, the more chaos ensues." – Haley Carpenter, Founder of Chirpy [16]

A solid roadmap cuts through the chaos, turning scattered efforts into focused campaigns. Pairing this roadmap with prioritization frameworks like PXL or ICE ensures your team zeroes in on opportunities that deliver the most impact [16].

Another cornerstone of success? Client reporting. Transparent, data-rich reports are the backbone of strong client relationships. These reports should go beyond surface-level metrics, showcasing ROI through conversion rates, customer lifetime value, and revenue attribution. With only one in seven A/B tests typically yielding a clear winner [18], being upfront about both wins and lessons learned builds trust and credibility over time.

Scaling A/B testing requires a balance between efficiency and personalization. Agencies often rely on adaptable test templates and replicate successful strategies across different client segments. Advanced filtering tools help refine insights by isolating metrics like device type, location, and traffic source. This approach not only simplifies internal processes but also delivers actionable client-specific recommendations.

The best agencies treat A/B testing as an ongoing process rather than a one-off task. They document hypotheses, analyze results, and integrate successful experiments into larger marketing strategies. This ensures that each test contributes to long-term growth, not just short-term wins.

Whether you opt for a centralized team structure for streamlined coordination or a decentralized model for quicker decision-making [17], the secret to success lies in consistency and clear communication. Clients want more than just results - they want to understand the "why" and "how" behind them. By building on past successes and maintaining transparency, every campaign becomes a stepping stone for future growth.

FAQs

How can agencies stay compliant with U.S. privacy laws when running A/B tests?

Agencies conducting A/B testing in the U.S. can stay on the right side of privacy laws by adhering to some essential legal practices. Start by being upfront about data collection - provide clear notices explaining what data is gathered and why. Always secure explicit user consent before collecting any personal information, and avoid gathering personally identifiable information (PII) unless it’s absolutely critical. Whenever possible, anonymize the data to further protect user privacy.

Keep in mind that privacy laws differ from state to state, so staying updated on local regulations is a must. Regularly review legal changes and adopt privacy-conscious testing methods, such as limiting how long data is stored and using secure storage systems. By focusing on transparency and earning users' trust, agencies can run meaningful A/B tests without stepping into legal trouble.

What are the best practices for creating clear and professional A/B testing reports for clients?

When crafting A/B testing reports for clients, focus on presenting clear, actionable insights. Begin with a concise summary of the test's objectives, hypotheses, and key outcomes. Make sure to highlight metrics that directly influence user behavior - like conversion rates or click-through rates - to underline the test's relevance.

Incorporate visual aids, such as charts or graphs, to simplify complex data and make your findings more digestible. Highlight statistically significant results to build credibility and demonstrate the reliability of your analysis.

Your report should also include specific recommendations based on the results. This helps clients understand the next steps and the potential impact these changes could have on their business. Use consistent formatting throughout, sticking to conventions like U.S. date formats (MM/DD/YYYY), dollar symbols ($) for monetary values, and imperial measurements when needed.

A well-organized, professional report not only builds trust but also reinforces your expertise in delivering data-driven results that matter.

How can agencies efficiently manage A/B testing for multiple clients while keeping results customized?

Agencies looking to manage A/B testing across multiple clients more efficiently can rely on automation tools. These tools simplify the entire process - handling test management, generating reports, and tracking performance - saving valuable time and cutting down on manual work. This allows teams to shift their focus to creating tailored solutions for each client.

To keep things personalized, it’s essential to use a hypothesis-driven approach and carefully segment audiences. This ensures that every test aligns with a client’s specific objectives while still being part of a scalable system. By blending automation with smart planning, agencies can achieve meaningful results without sacrificing quality or the personal touch clients expect.