A/B testing your landing pages is the best way to find out what works - and what doesn’t. Instead of guessing, you use real user data to improve conversions, engagement, and overall performance. Here’s how to get started:

- What is A/B Testing? Test two versions of a landing page (A and B) by splitting traffic between them. Change one element at a time - like a headline, button color, or image - and see what performs better.

- Why It Matters: Even small changes can make a big difference. Examples: A button color change boosted conversions by 21%, and a shorter form increased signups by 13%.

- Key Steps:

  - Set Goals: Focus on one objective, like increasing signups or purchases.

  - Create a Hypothesis: Use data to predict what change will improve results.

  - Choose Elements to Test: Headlines, CTAs, images, forms, or trust signals.

  - Run the Test: Split traffic evenly, track metrics, and wait for enough data.

  - Analyze Results: Look for statistically significant improvements before making changes.

Pro Tip: Tools like Google Analytics or Optimizely can simplify tracking and analysis. Focus on high-impact areas like CTAs and headlines, and always test on mobile devices.

A/B testing isn’t a one-time fix - it’s an ongoing process. Start small, learn from each test, and keep optimizing to get the most out of your landing pages.


Planning Your A/B Test

Good planning is the backbone of any successful A/B test. Without a clear strategy, you risk wasting time and resources, ending up with data that doesn't lead to actionable insights.

Set Clear Goals and Pick Key Metrics

Every A/B test should have a specific and measurable objective. Vague goals lead to confusion and make it hard to assess success. Instead, pinpoint exactly what you want to achieve and how you'll measure it.

Focus on a single goal - whether that's boosting email sign-ups, increasing product purchases, or improving click-through rates on your call-to-action (CTA). Zeroing in on one objective helps simplify your analysis and ensures your test has a clear purpose.

Next, pick the metric that aligns with your goal. For example, if you're testing an e-commerce landing page, you might track the percentage of visitors who add items to their cart or complete a purchase.

"You need to include enough visitors and run the test long enough to ensure that your data is representative of regular behavior across weekdays and business cycles."

- Michael Aagaard, CRO Expert [1]

Setting a SMART goal can help you stay focused. For instance, instead of saying, "improve mobile conversions", aim for something like, "increase mobile conversion rate by 15% within 30 days." This approach makes it easier to measure progress and determine success. Decide in advance what size of improvement would count as a win, such as a specific increase in weekly conversions [2].
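To make a goal like "increase mobile conversion rate by 15%" concrete, it helps to translate the relative lift into a target rate and a count of extra conversions. The sketch below is only an illustration: the 4% baseline and the 10,000 monthly visitors are assumed numbers, not figures from any source cited here.

```python
def target_rate(baseline_rate: float, relative_lift: float) -> float:
    """Conversion rate implied by a relative lift over the baseline."""
    return baseline_rate * (1 + relative_lift)


def extra_conversions(visitors: int, baseline_rate: float, relative_lift: float) -> float:
    """Additional conversions the lift would produce for a given number of visitors."""
    return visitors * baseline_rate * relative_lift


# Hypothetical inputs: 4% baseline mobile conversion rate, 10,000 visitors in 30 days.
baseline = 0.04
goal = target_rate(baseline, 0.15)
print(f"Target rate: {goal:.2%}")
print(f"Extra conversions: {extra_conversions(10_000, baseline, 0.15):.0f}")
```

Note that a 15% relative lift on a 4% baseline is a move to 4.6%, not to 19%. Stating the goal in both forms avoids that confusion when you report results.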

Create Measurable Hypotheses

A strong hypothesis is the foundation of any meaningful test. It should clearly outline the problem, propose a solution, and predict the outcome. Importantly, your hypothesis should be based on data - not guesswork. Tools like Google Analytics, heat maps, customer surveys, or user testing can help you identify real pain points.

For example, if your analytics reveal high bounce rates on mobile devices, you might hypothesize that simplifying the mobile layout will reduce those bounces. A real-world example of this approach involved a company offering a free ebook. When they realized visitors might be deterred by the perceived reading time, they updated the copy to highlight that the ebook could be read in just 25 minutes. This small tweak had a big impact on download rates [7].

To get reliable results, focus on testing one variable at a time. If you change multiple elements - like a headline and a button color - at once, it becomes impossible to know which change influenced the outcome. For instance, if you're deciding between two headline options and two button colors, run separate tests for each to pinpoint what works best.

Choose Which Elements to Test

Not every part of your landing page carries the same weight when it comes to conversions. Focus your testing on the elements most likely to influence user behavior and drive results.

- Headlines: A strong, clear headline can make a huge difference. In fact, landing pages with compelling headlines have been shown to boost conversion rates by up to 300% [11]. Experiment with different ways to present your value proposition or appeal to your audience's emotions.

- Call-to-Action Buttons: Small tweaks to your CTAs can lead to big results. For example, personalized CTAs perform 202% better than generic ones [11]. Test variables like button color, size, text, or placement. Moz increased monthly leads by 148% by refining their CTA copy [9], while Going saw a 104% jump in trial sign-ups by testing "Sign up for free" against "Trial for free" [8].

- Forms: Forms are critical for capturing leads. Experiment with different lengths, field types, or layouts. Multi-step forms, for instance, can make complex sign-ups feel more manageable.

"At Apexure, we use multi-step forms to gently nudge users into completing them without feeling overwhelmed. We start with a simple question, leveraging the 'foot-in-the-door' technique. This makes it easy for users to interact with the form, increasing the likelihood of continuing to the end."

- Waseem Bashir, Founder & CEO, Apexure [10]

- Images and Videos: Visual elements play a key role in engagement. Test different types of visuals - like product shots versus lifestyle images, or videos versus static content - to see what resonates most with your audience.

- Copy Length: The amount of text on your landing page can also influence conversions. For example, Crazy Egg saw a 363% increase in their conversion rate by switching from short-form to long-form content [9]. Test whether your audience prefers detailed explanations or quick, concise messaging.

- Trust Signals: Elements like security badges, testimonials, and guarantees can make a big difference. Trust signals have been shown to boost conversions by as much as 42% [11]. And don't underestimate the power of good design - 94% of first impressions are design-related, so make sure your layout, color scheme, and overall visual appeal are on point [11].

Once you've identified the key elements to test, you're ready to create your test versions and start gathering data.

Running Your A/B Test

Now that you've planned your A/B test, it's time to put it into action. This phase is all about executing your test carefully to ensure the data you collect is both accurate and useful.

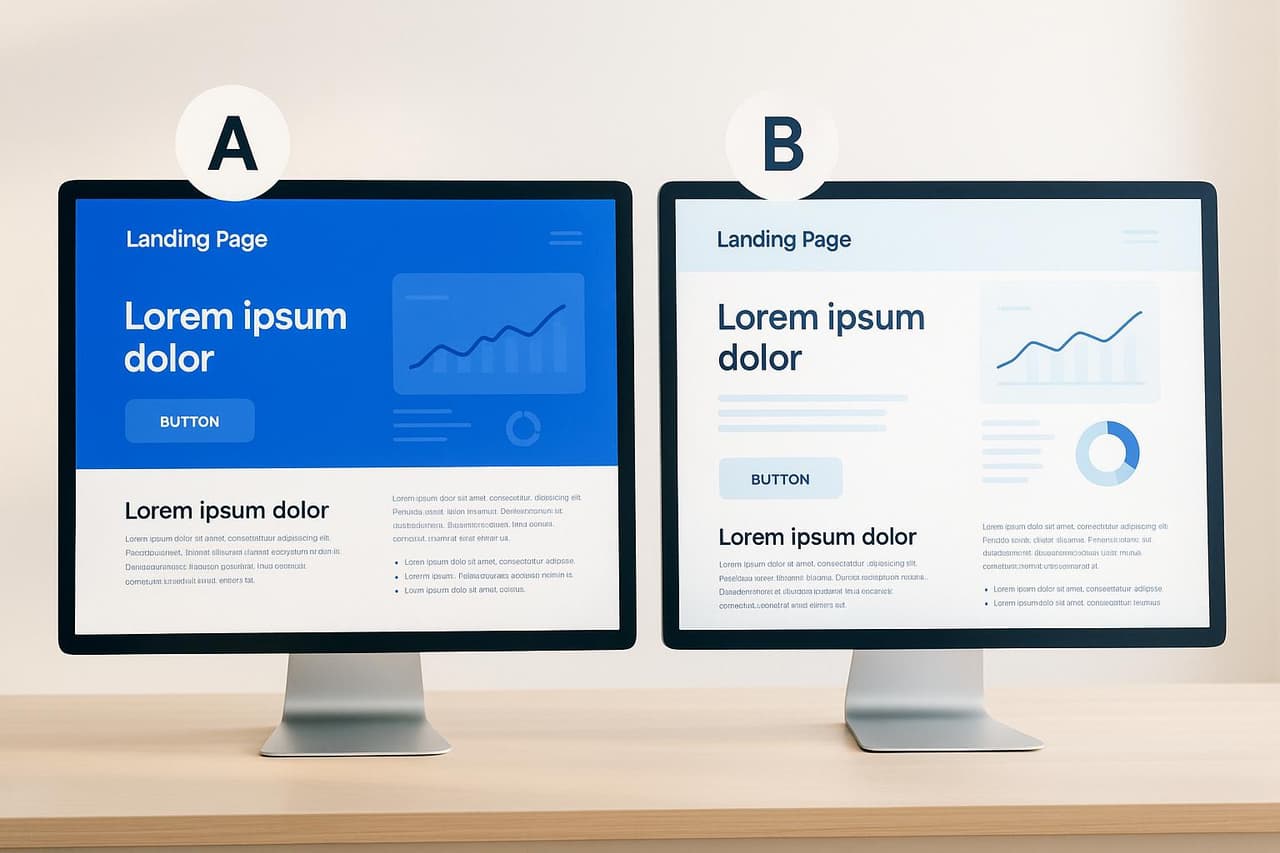

Create Your Test Versions

Start by building the versions you'll test. At a minimum, you'll need two: your control version (A) - usually the original landing page - and your test version (B), which includes the specific change you're evaluating.

Keep your changes focused. Adjust only one element at a time so that any performance differences can be directly linked to that change [1]. For example, instead of redesigning the entire page, you might try switching your call-to-action from passive to active language.

Small adjustments can sometimes yield surprising results. Take these examples:

- Leadpages customer The Foundation saw a 28% increase in conversions by simplifying their headline.

- Jae Jun from Old School Value discovered a 99.76% improvement by swapping out a traditional image for a modern one.

- Leadpages user Carl Taylor boosted conversions by over 75% by testing two different photos of himself.

- Author Amanda Stevens achieved better results by changing her eBook landing page headline from "New Book Reveals Rescue Remedies for Retailers" to a more targeted message: "If you're a retailer in need of fresh ideas and proven growth strategies, this book is for you!" [13].

Before launching, double-check that your analytics tools are correctly set up and ensure your test works consistently across all devices and browsers. Once everything is ready, split your traffic and start testing.

Split Traffic and Start Testing

With your test versions finalized, it's time to divide your website traffic. Randomly split visitors so everyone has an equal chance of seeing either version [15]. For two variants, use a 50/50 split; for more variants, distribute traffic evenly [1].
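One common way to implement a random but stable split is to hash a visitor identifier, so the same visitor always lands on the same version. The following is a minimal sketch under that assumption; the function name, the experiment label, and the visitor IDs are all hypothetical, and most testing tools handle this step for you.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to one variant with equal probability."""
    # Including the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# The same visitor always gets the same version of the page.
print(assign_variant("visitor-123", "headline-test"))
print(assign_variant("visitor-123", "headline-test"))
```

Hashing instead of calling a random number generator on every page view means a returning visitor does not flip between versions, which would blur the comparison.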

If you're running an established campaign, you might allocate a smaller percentage of traffic to new variants while keeping the majority on your current best-performing version. Here are two common approaches to traffic allocation:

- Manual allocation: Split traffic evenly until there’s a clear winner. This method works well for long-term tests but may expose some visitors to underperforming variations while you gather data [14].

- Automatic allocation (multi-armed bandit): Gradually shift more traffic to the better-performing variation. This is ideal for short-term campaigns with limited timeframes [14].
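To illustrate how automatic allocation can shift traffic, here is a small simulation of Thompson sampling, one of several multi-armed bandit strategies. It is a sketch, not a description of how any particular tool works: the true conversion rates are invented for the simulation, and in a real test they are unknown.

```python
import random


def choose_variant(stats, rng):
    """Pick the variant whose sampled conversion rate is highest.

    stats maps variant name -> (conversions, visitors).
    """
    best_name, best_draw = None, -1.0
    for name, (conversions, visitors) in stats.items():
        # Beta(1 + successes, 1 + failures) is the posterior under a uniform prior.
        draw = rng.betavariate(1 + conversions, 1 + visitors - conversions)
        if draw > best_draw:
            best_name, best_draw = name, draw
    return best_name


def simulate(true_rates, n_visitors, seed=0):
    """Route simulated visitors one at a time and record outcomes."""
    rng = random.Random(seed)
    stats = {name: (0, 0) for name in true_rates}
    for _ in range(n_visitors):
        name = choose_variant(stats, rng)
        conversions, visitors = stats[name]
        converted = rng.random() < true_rates[name]  # hypothetical visitor behavior
        stats[name] = (conversions + int(converted), visitors + 1)
    return stats


# Assumed true rates, for illustration only.
result = simulate({"A": 0.05, "B": 0.15}, n_visitors=5000, seed=1)
for name, (conversions, visitors) in result.items():
    print(name, "visitors:", visitors, "conversions:", conversions)
```

As evidence accumulates, the stronger variant receives most of the traffic. The trade-off is that the weaker variant collects less data, which makes a classic significance test on the final numbers harder to interpret.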

Once you’ve set your traffic splits, don’t adjust them during the test. Let it run for at least 1–2 weeks to account for daily trends and business cycles [16]. During this time, focus on tracking key metrics to evaluate your hypothesis.

Track Progress and Analyze Data

As your test runs, monitor the metrics you identified in your SMART goals. Pay close attention to indicators like conversion rate, click-through rate (CTR), bounce rate, and average time on page [3]. For example, if your conversion rate improves but your bounce rate spikes, it could mean you're attracting the wrong audience. Scroll depth, ideally between 60% and 80%, can also provide valuable insights when paired with session duration or time on page [17].

Statistical significance is essential for reliable conclusions. Use a test suited to your metric, such as a chi-square test for conversion counts or a t-test for continuous measures like time on page, to determine whether the differences in performance are real [3]. Even if you hit 95% confidence, make sure you’ve gathered a large enough sample before drawing conclusions.
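For conversion rates, a significance check can be sketched with a two-proportion z-test, which for two variants is equivalent to a chi-square test without continuity correction. The visitor and conversion counts below are made up for illustration, and the function name is an assumption rather than part of any tool mentioned here.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 200 of 5,000 visitors converted on A, 250 of 5,000 on B.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("Significant at the 5% level" if p < 0.05 else "Not significant at the 5% level")
```

The p-value is only trustworthy if the sample size was fixed before the test started. Checking it repeatedly and stopping at the first significant reading inflates the false positive rate.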

Don’t forget to consider external factors like seasonality, traffic sources, or major events that could skew your results [3]. Tools like Optimizely and VWO can help you split traffic and analyze performance effectively. Google Optimize, long a popular free option, was discontinued by Google in September 2023, so it is no longer available for new tests.

For instance, NatWest, a retail bank in the U.K., used Contentsquare's Experience Analytics platform to assess its mobile app's savings hub page. They noticed a high exit rate, with users scrolling far down the page before leaving. Suspecting that key information was buried, they tested a revised design featuring a more concise and scannable Fixed Rate ISA card. The result? A noticeable improvement in application completion rates [4].

"A/B testing allows you to test those assumptions, and base decisions on evidence not guesswork."

- Leah Boleto, Conversion Optimization Strategist [15]

Across industries, the median conversion rate sits at 4.3% [17]. Use this benchmark to gauge whether your results are moving in the right direction or if further adjustments are necessary. Let these insights guide your next steps as you refine your landing pages and continue testing.


Understanding Results and Common Mistakes

Once your test wraps up, the real work begins - interpreting the results and learning from them. Proper analysis is essential to avoid missteps that could lead to incorrect conclusions.

Understanding Statistical Confidence

Statistical confidence helps you judge whether the differences you observe between test versions are real or just random noise. Most marketers aim for a 95% confidence level, which means that if the two versions truly performed the same, a difference this large would show up by chance only about 5% of the time. This equates to a significance level of 0.05, which is the generally accepted threshold for making decisions [18]. However, research shows that only 20% of experiments reach this level of confidence [20].

To ensure your results are reliable, don’t rush. Wait until your test has gathered enough data to meet the required sample size [19]. Tools like A/B testing calculators or statistical software can help determine when your findings are valid [18]. For the best outcomes, aim for 80% test power (which means there’s only a 20% chance of missing a real effect) and stick to the 5% significance level [21]. Once you’re confident in your data, watch out for common errors that could undermine your findings.
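The required sample size follows from the baseline rate, the smallest lift you care about, the significance level, and the power. The sketch below uses the standard normal-approximation formula for comparing two proportions. The 4% baseline and 15% relative lift are assumed inputs, and online calculators may use slightly different formulas and give slightly different numbers.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed per variant to detect the given relative lift (two-sided test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical scenario: 4% baseline, aiming to detect a 15% relative lift.
print(sample_size_per_variant(0.04, 0.15))
```

With these inputs the answer comes out at roughly 18,000 visitors per variant. Smaller lifts need far more traffic, because the required sample grows with the inverse square of the difference you want to detect.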

Common Testing Mistakes to Avoid

Even the most experienced testers can fall into traps that skew results. Here are some frequent mistakes to watch for:

- Ending tests too soon: Cutting a test short can lead to incomplete or misleading data, increasing the risk of false positives [24].

- Testing too many changes at once: Focus on one variable at a time to pinpoint what’s driving the results.

- Ignoring sample size requirements: Always ensure your sample size is adequate; calculators can help you get this right [23].

- Failing to document tests: Record outcomes from every experiment, even those that don’t succeed. These insights can guide future tests [22].

- Assuming results apply to everyone: Segment users by demographics or behavior to uncover differences in performance [22].

- Chasing vanity metrics: Prioritize metrics tied to revenue or other meaningful business outcomes [25].

- Overlooking downstream impacts: Monitor the entire user journey to identify any unintended negative effects [22].
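The first mistake in the list above, ending tests too soon, can be demonstrated with a simulation. The sketch below runs A/A tests, where both versions have the same true conversion rate, so every "significant" result is a false positive. It compares checking once at the end with checking at every interim look and counting the test as won if any look crosses the threshold. All parameters are arbitrary illustration values.

```python
import random
from math import sqrt
from statistics import NormalDist


def is_significant(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided two-proportion z-test at the given significance level."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return False  # no variation yet, nothing to test
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z))) < alpha


def false_positive_rates(n_tests=300, visitors=2000, rate=0.10, peek_every=200, seed=0):
    """Return (rate with peeking, rate with a single final check) for A/A tests."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = 0
        any_look_significant = False
        for i in range(1, visitors + 1):
            conv_a += rng.random() < rate  # both arms share the same true rate
            conv_b += rng.random() < rate
            if i % peek_every == 0 and is_significant(conv_a, i, conv_b, i):
                any_look_significant = True
        peeking_hits += any_look_significant
        fixed_hits += is_significant(conv_a, visitors, conv_b, visitors)
    return peeking_hits / n_tests, fixed_hits / n_tests


peeking, fixed = false_positive_rates()
print(f"False positives with peeking: {peeking:.1%}")
print(f"False positives with one final check: {fixed:.1%}")
```

Because the final check is also one of the looks, the peeking rate can never be lower than the fixed rate, and in practice it is usually well above the nominal 5%.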

Keep Testing and Improving

A/B testing isn’t a one-and-done process - it’s a continuous cycle. Use every result, whether it’s a win or a loss, to shape your next experiment. Remember, only about one in seven A/B tests produces a winning result [19].

"Quantity of tests over quality makes sense in many cases - getting 15 cracks at the can to hit a 6% lift is better than waiting for 9 months for a single test to 'be significant.' So I'd urge strategists to think about volume and be okay ending a test as 'no significant impact' and moving onto the next test."

- Shiva Manjunath, Experimentation Manager at Solo Brands [26]

Plan future tests based on their potential impact and ease of execution [27]. Focus on high-traffic pages and elements that directly influence conversions - like navigation menus or calls-to-action - since improving these can have ripple effects across multiple areas of your site [5]. For ideas that require validation before committing to a full-scale test, consider alternative methods like five-second testing, preference testing, or user testing [26].

"A/B testing helps us gain confidence in the change we're making. It helps us validate new ideas and guides decision making. Without A/B testing, we're leaving much of what we do up to chance."

- Des Navadeh, Product Manager of the Jobs team at Stack Overflow [21]

The secret to success lies in staying consistent and strategic. Small, incremental wins add up over time, so don’t wait for a big breakthrough. Keep testing, learning, and improving [22].

A/B Testing Tools and Best Practices

The tools and strategies you choose can determine whether your A/B tests lead to meaningful insights or end up wasting time and resources. Here’s how you can set yourself up for success.

Using PIMMS for A/B Testing

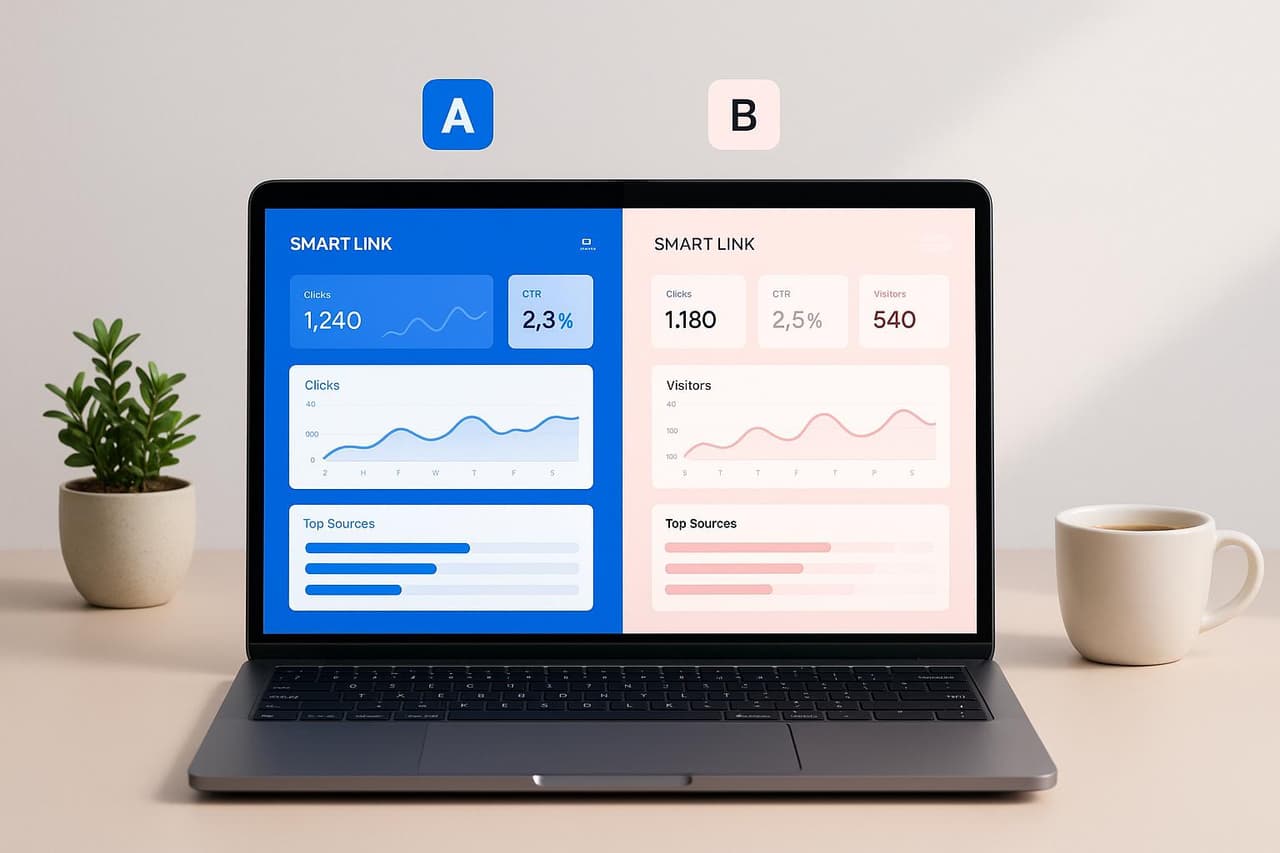

PIMMS offers built-in A/B testing features that make optimizing your landing pages straightforward and effective. With this platform, you can compare multiple versions of your destination pages while tracking the metrics that matter most to your business.

The platform’s real-time analytics dashboard provides insights into clicks, conversions, and revenue. This isn’t just about identifying which version gets more clicks - it’s about understanding which one drives actual revenue. Plus, PIMMS integrates smoothly with tools like Stripe and Shopify, letting you follow the entire customer journey, from the first click all the way to the final purchase.

Setting up tests in PIMMS is simple. You can create multiple variations of a landing page and use its traffic splitting feature to send visitors to different versions. PIMMS automatically tracks performance across key metrics, and you can dig deeper into the data by filtering results based on traffic source, device type, geographic location, or UTM parameters. This helps you see how different audience groups respond to your tests.
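If you export raw visit data and want to reproduce a UTM breakdown yourself, the segmentation can be sketched in a few lines. This example is not the PIMMS API. The data shape, the function names, and the URLs are assumptions made purely for illustration.

```python
from collections import defaultdict
from urllib.parse import parse_qs, urlparse


def utm_source(url: str) -> str:
    """Extract utm_source from a landing URL, or '(none)' if it is absent."""
    params = parse_qs(urlparse(url).query)
    return params.get("utm_source", ["(none)"])[0]


def conversion_rate_by_source(visits):
    """visits is an iterable of (landing_url, converted) pairs."""
    totals = defaultdict(lambda: [0, 0])  # source -> [conversions, visits]
    for url, converted in visits:
        counts = totals[utm_source(url)]
        counts[0] += int(converted)
        counts[1] += 1
    return {source: conv / n for source, (conv, n) in totals.items()}


# Hypothetical exported visits.
sample = [
    ("https://example.com/landing?utm_source=newsletter", True),
    ("https://example.com/landing?utm_source=newsletter", False),
    ("https://example.com/landing?utm_source=facebook", False),
    ("https://example.com/landing", True),
]
print(conversion_rate_by_source(sample))
```

Segments with few visits produce noisy rates, so treat small groups as hints for future tests rather than as conclusions.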

The shared dashboard feature is especially handy for team collaboration or client reporting, ensuring everyone has access to the same real-time data and insights.

By combining these capabilities with solid testing practices, you can maximize the impact of your experiments.

Best Practices for Better A/B Testing

Even with a tool like PIMMS simplifying the process, following best practices is essential to get reliable and actionable results from your tests. Here are some proven strategies to consider:

- Focus on one variable at a time. Testing a single change - like a headline or button color - helps you isolate its impact. A study of 2,732 A/B tests found that focusing on one variable leads to more reliable insights [29].

- Prioritize high-impact elements. Key areas like headlines, call-to-action buttons, and hero images often drive the biggest changes in conversions. For example, Swiss Gear revamped its product pages for better clarity and saw a 52% boost in conversions, with seasonal spikes reaching 137% during peak shopping times [29].

- Calculate sample size and run tests over full business cycles. Use free online calculators to ensure your sample size is sufficient, and run tests long enough to capture consistent user behavior [28] [27].

- Optimize for mobile. With mobile users making up a large share of web traffic, ensure your tests perform well on all devices. For instance, Blissy directs Facebook traffic to mobile-optimized landing pages with clean, scrollable layouts and well-placed calls-to-action [29].

- Document everything. Record your hypothesis, changes, test duration, traffic volume, and results, including statistical significance. This creates a reference point for future tests [27].

- Segment your data and track secondary metrics. Break down results by user groups and monitor metrics like time on page, bounce rate, and pages per visit to uncover deeper insights [27].

- Use statistical significance calculators. Before making decisions, aim for at least 95% confidence to ensure your results aren’t just due to random chance [28].

Continuous, incremental improvements are key to long-term success. For example, Clear Within, a skin health supplement brand, increased its add-to-cart rate by 80% by refining its hero section, highlighting key ingredients with icons, and improving call-to-action placement [29]. These changes were based on a deep understanding of their audience and careful testing.

"The concept of A/B testing is simple: show different variations of your website to different people and measure which variation is the most effective at turning them into customers." – Dan Siroker and Pete Koomen [12]

It’s worth noting that 50-80% of A/B tests don’t yield conclusive results due to inadequate data tracking [29]. This isn’t a failure - it’s part of the learning process. Each test, whether successful or not, offers insights that can guide your strategy and help you improve over time.

Key Takeaways

A/B testing your landing pages is a structured way to learn about your audience and improve performance over time. By breaking the process into simple, actionable steps, any marketer can incorporate this approach into their workflow.

A/B Testing Process Summary

The foundation of any A/B test is a solid hypothesis. Craft a clear, focused statement predicting the outcome of your test. This should outline the specific change you're making, the expected impact, and the reasoning behind it based on your current data, analytics, and user feedback [6]. This isn't about guessing - it's about making informed predictions.

Next, create two versions of your landing page: the original (control) and the variation. Split your traffic evenly between them, ensuring each visitor only sees one version. Let the test run long enough to gather sufficient data and reach statistical significance [6]. Once the results are in, analyze the performance, document your findings, and implement the winning version to improve your results [6].

A/B testing takes the uncertainty out of website optimization. Even if a test doesn’t produce a clear winner, the insights you gain will guide future experiments. It’s all about learning what resonates with your audience and applying those lessons.

Start Testing Your Landing Pages

With a clear process in hand, it’s time to start testing. Focus on your highest-traffic or most conversion-critical landing pages first. Use the ICE prioritization framework - Impact, Confidence, Ease - to decide which tests to prioritize [13]. Pages with strong calls to action are a great starting point since their performance is easier to measure.
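ICE scoring is easy to keep in a spreadsheet, but a short script shows the mechanics. The sketch below assumes each factor is rated from 1 to 10 and that the score is the average of the three. Some teams multiply the factors instead, and the test ideas and ratings here are invented.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int      # expected effect on conversions, 1-10
    confidence: int  # how sure you are it will work, 1-10
    ease: int        # how simple it is to build and run, 1-10

    @property
    def ice_score(self) -> float:
        return (self.impact + self.confidence + self.ease) / 3


def rank_ideas(ideas):
    """Sort test ideas from highest to lowest ICE score."""
    return sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)


# Hypothetical backlog of test ideas.
backlog = [
    Idea("Rewrite headline", impact=8, confidence=6, ease=9),
    Idea("Change CTA copy", impact=7, confidence=7, ease=10),
    Idea("Switch to multi-step form", impact=9, confidence=5, ease=4),
]
for idea in rank_ideas(backlog):
    print(f"{idea.ice_score:.1f}  {idea.name}")
```

The ratings are subjective, so the ranking is best used to start a discussion about what to test next, not to settle it.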

Remember, small changes add up over time [13]. Each successful test becomes your new baseline (or control) for the next experiment. This iterative approach ensures continuous improvement, helping you get more out of your existing traffic without spending extra on new visitors [30].

The key is to start. Test one variable at a time, document your process, and run tests long enough to see meaningful results. Treat optimization as an ongoing effort - audience preferences evolve, and regular testing ensures your landing pages stay effective.

Currently, about 60% of companies conduct A/B tests on their landing pages [13]. However, the biggest gains go to those who take a systematic, consistent approach. Start with one test, learn from the results, and build from there. A/B testing is one of the most cost-effective ways to boost your marketing performance, so dive in and see what works for your audience.

FAQs

What should I focus on testing first to get the biggest impact from my landing page A/B tests?

To get the most out of your A/B tests, focus on the elements that have a direct impact on user engagement and conversion rates. These often include:

- Headlines: Experiment with different messaging to find what captures attention most effectively.

- Call-to-action (CTA): Play around with placement, wording, or design to see what drives more clicks.

- Visuals: Test various images, videos, or graphic styles to determine what resonates with your audience.

- Page load speed: Faster pages mean fewer people leaving before they even see your content.

- Trust signals: Adjust the visibility of reviews, testimonials, or badges to see how they influence credibility.

Focus on changes that make the user experience smoother, inspire confidence, and encourage action. Dive into analytics and user behavior data to pinpoint weak spots, and test one variable at a time to gather clear, actionable results.

What are the common mistakes to avoid when A/B testing landing pages, and how can I get accurate results?

When running A/B tests on landing pages, there are a few pitfalls you’ll want to avoid. Testing too many changes at once, using a sample size that’s too small, or stopping the test too soon are all common mistakes. These missteps can lead to unreliable data, making it hard to draw meaningful conclusions.

For more accurate results, start with a clear hypothesis and focus on testing just one variable at a time - like the headline, button color, or page layout. Ensure your sample size is large enough to yield statistically reliable data, and let the test run long enough to account for fluctuations in traffic. Resist the urge to end the test prematurely, and keep detailed records of your process to identify what works and what doesn’t.

By sticking to these principles, you’ll be better equipped to make informed, data-backed decisions that can genuinely improve your landing page performance.

How can I tell when my A/B test has enough data to confidently pick a winning landing page?

To confidently pick a winner in your A/B test, you’ll need to focus on three key factors: statistical significance, ample sample size, and consistent performance throughout the test period. Statistical significance is usually declared when the p-value is below 0.05, which corresponds to a confidence level of 95%. For sample size, one benchmark is at least 30,000 visitors and 3,000 conversions per variant, though the right numbers depend on your baseline conversion rate and the size of the effect you want to detect.

Make sure your test runs long enough to capture variations in user behavior, like differences between weekdays and weekends. Jumping to conclusions too early can lead to misleading results - so take your time and let the data guide you!