A/B tests need time to deliver reliable results. Ending too early or running too long can lead to flawed decisions. Here’s what you need to know:

- Run tests for at least 2 business cycles (typically 2 weeks) to account for daily and weekly user behavior patterns.

- Traffic matters: High-traffic sites get results faster, while low-traffic sites need longer durations to gather enough data.

- Big changes = quicker results: Bold changes (like redesigns) tend to produce larger effects, which reach significance with less data than small tweaks (like button colors).

- Wait for statistical significance (95% confidence level) so you can rule out random noise. Don’t stop tests early, even if one variation looks better initially.

- Seasonality affects results: Avoid testing during holidays or unusual traffic spikes to get accurate insights.

Pro Tip: Use A/B test duration calculators to estimate how long your test should run based on your traffic, conversion rates, and goals. Always plan for full business cycles to capture realistic user behavior.

What Affects How Long Your A/B Test Should Run

The duration of your A/B test depends on several factors. Understanding these can help you set realistic timelines and ensure your tests produce reliable insights.

Your Website or App Traffic Volume

Traffic volume is one of the biggest factors in determining test duration. Simply put, high-traffic sites can gather data faster, while low-traffic sites need more time to accumulate enough information for meaningful results.

For example, if your conversion rate is 30% and you're aiming for a 20% relative improvement, you'll need around 1,000 visits per variation. However, with a 5% conversion rate, that number jumps to 7,500 visits per variation. Testing for smaller changes, such as a 10% relative improvement, requires even more data - over 30,000 visits per variation. For sites with a 2% conversion rate and a goal of just a 5% relative increase, the requirement skyrockets to nearly 310,000 visits per variation [5].
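These figures can be roughly reproduced with the standard sample-size approximation for comparing two proportions. The sketch below is a minimal version, assuming a two-sided test at 95% confidence and 80% power. The power setting and the function name `visitors_per_variation` are assumptions for illustration, not something the cited calculator documents, so expect results in the same ballpark rather than exact matches.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation to detect a relative lift.

    Uses the normal approximation for a two-sided, two-proportion test.
    alpha=0.05 corresponds to 95% confidence; power=0.80 is an assumed default.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # conversion rate if the lift is real
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


if __name__ == "__main__":
    # The four scenarios mentioned in the paragraph above.
    for rate, lift in [(0.30, 0.20), (0.05, 0.20), (0.05, 0.10), (0.02, 0.05)]:
        needed = visitors_per_variation(rate, lift)
        print(f"{rate:.0%} baseline, {lift:.0%} relative lift: {needed:,} per variation")
```

The required sample grows with the inverse square of the difference you want to detect, which is why halving the expected lift roughly quadruples the traffic you need.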

"Higher conversion rates and traffic reduce test duration, while low traffic necessitates longer tests." - Shiva Manjunath, Experimentation Manager at Solo Brands [3]

As a general guideline, a simple A/B test needs at least 5,000 visitors per week to the test page and 500 conversions weekly (250 per variation) [6]. If your site generates only a few thousand monthly visits and 100 conversions per variation, testing becomes challenging [7].

For sites with low traffic, consider bold changes that produce noticeable differences. You can also limit the number of variations or track higher-volume goals, like add-to-cart actions, instead of final purchases [6].

Next, let’s look at how the size of the change you’re testing influences test duration.

Size of Change You're Testing

The scale of the change you’re testing directly impacts how long your test will need to run. Small changes, like tweaking button colors or minor copy edits, take longer to detect because they require more data to distinguish meaningful results from random fluctuations.

"The lower the expected improvement rate, the greater the sample size needed to be able to detect a real difference." [2]

Testing small changes means you'll need more visitors, which can extend test durations significantly. On the other hand, larger changes - like redesigning an entire page or overhauling a workflow - are easier to measure and can yield results more quickly [2][3].

"Do bandwidth and MDE calculations and you will know how long your test should run and how big the effect is you can measure (MDE). Knowing how big the effect is that you can measure can also indicate how big of a change you need to make. If your MDE is low (1-2%) you can change text descriptions, if your MDE is high (15%) you should aim for multiple changes at once to reach the effect that you are able to measure." - Lucia van den Brink, Lead Consultant at Increase Conversion Rate [3]

If time is a concern, focus on testing bold hypotheses that have the potential for larger impacts, rather than incremental adjustments.

Statistical Confidence and Sample Size

To trust your test results, you need enough data to ensure they aren’t due to random chance. Statistical significance depends on your current conversion rate, the expected improvement, and the sample size.

Higher baseline conversion rates make it easier to detect a given relative change because each visitor contributes more conversion data. Conversely, lower conversion rates require more visitors to achieve the same level of confidence, extending test durations.

The minimum detectable effect (MDE) - the smallest change you want to be able to detect reliably - also plays a critical role. Testing programs often prioritize bold changes because smaller improvements demand much larger sample sizes. Together, the baseline rate, the MDE, and the confidence level determine the sample size you need, and therefore how long your test should run.
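To make the idea of statistical significance concrete, here is a minimal sketch of a pooled two-proportion z-test, one common way to check whether a difference in conversion rates clears the 95% threshold. The function name and the example counts are hypothetical, and testing platforms may use other methods, so treat this as an illustration rather than a description of any specific tool.

```python
from statistics import NormalDist


def two_proportion_p_value(conv_a, visitors_a, conv_b, visitors_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Pooled rate: the best estimate if both variations truly convert equally.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = (pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b)) ** 0.5
    if std_err == 0:
        return 1.0  # zero (or all) conversions in both arms: no evidence of a difference
    z = abs(rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(z))


if __name__ == "__main__":
    # Hypothetical counts: 4.0% vs 5.0% conversion on 5,000 visitors each.
    p = two_proportion_p_value(200, 5000, 250, 5000)
    print(f"p-value: {p:.4f}, significant at 95%: {p < 0.05}")
```

A p-value below 0.05 corresponds to the 95% significance level used throughout this article. It is only meaningful if the sample size and stopping point were fixed before the test started.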

But numbers aren’t the only factor. Timing matters too, especially when it comes to seasonality.

Seasonality and Business Cycles

Your tests need to account for natural variations in user behavior, including differences between weekdays, weekends, and seasonal patterns. User behavior isn’t always immediate - many people research, compare, and take days or weeks before converting [2].

"The most important element for accurate A/B test results is that you run your tests over at least two full business cycles. This will help account for any seasonality. For example, if you see a predictable conversion trend over a week, you should set the test to last at least two weeks. A shorter period might not include seasonality effects and end with biased results." - Algolia [8]

If your typical purchasing cycle is three weeks but your test only runs for one, the results may not reflect actual user behavior [2]. Similarly, running tests during seasonal peaks can skew outcomes, as temporary traffic spikes might distort the data [9].

To avoid these pitfalls, run tests for full weeks to capture regular behavioral patterns [9]. Steer clear of testing during unusual seasonal events to ensure your results accurately reflect typical user interactions [9]. Factoring in these timing elements ensures your tests align with real-world behavior.

How Long Should A/B Tests Run: Industry Standards

When it comes to A/B testing, timing is everything. Experts in the field have established guidelines for test duration to help ensure reliable outcomes and avoid common pitfalls. Here's a closer look at the recommended timeframes and considerations.

Minimum Test Duration

Most testing professionals suggest running an A/B test for at least one to two weeks. This timeframe accounts for daily fluctuations in user behavior and helps avoid skewed results caused by short test periods [1][2].

Full Business Cycle Testing

To go beyond the bare minimum, a test spanning two full business cycles - typically two weeks - is widely regarded as the industry standard. Why? It smooths out variations in user behavior that occur throughout the week or month and captures delayed conversions from users who take longer to make decisions [2].

Adjusting Test Duration

While two weeks is a solid starting point, the ideal duration can vary depending on several factors. For example:

- High-traffic websites may detect meaningful results more quickly.

- Low-traffic websites often need extended test periods to gather enough data.

- Seasonal campaigns or deadlines might require adjustments to fit specific time constraints [4].

Shiva Manjunath, Experimentation Manager at Solo Brands, emphasizes the importance of tailoring test duration:

"True experiments should be running a minimum of 2 business cycles, regardless of how many variations. However, I don't know how helpful the 'average' time is, because it does depend on a number of factors which go into the MDE (minimum detectable effect) calculations. Higher conversion rates and higher traffic volumes means detectable effects will take less time to be adequately powered, and some companies simply don't have enough traffic or conversion volume to run experiments on sites." [3]

Lucia van den Brink, Lead Consultant at Increase Conversion Rate, offers this advice:

"Cutting down on runtime is not the way to go. If stakeholders want to (pre)validate changes faster you can always suggest other types of research: 5-second testing, preference testing, and user testing." [3]

By planning your test duration carefully and setting expectations with stakeholders upfront, you can capture a more representative range of user behavior. This approach reduces the risk of rushing to conclusions before the data fully matures [1].

Next, we’ll dive into tools that can help pinpoint the optimal end date for your tests.

Tools to Calculate Your Test Duration

A/B test duration calculators take the guesswork out of figuring out how long you need to run your test to achieve statistical significance. These tools ensure you gather enough data to make your results reliable, helping you avoid one of the most common pitfalls: ending tests too early [2][10].

To use these calculators, you’ll typically need to input a few key metrics: your current conversion rate, desired improvement, number of variations, and average daily visitors [10]. For example, if your website has a conversion rate of 3.2% and you’re aiming for a 15% relative increase, the calculator will factor in your daily traffic and test variations to estimate how many days you’ll need to run the test.
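The arithmetic behind such a calculator can be sketched in a few lines. This is a simplified illustration, not the logic of any particular tool: it assumes every daily visitor enters the test, traffic is split evenly across variations, and the result is rounded up to whole weeks with a two-week floor to reflect the business-cycle guidance above. The function name and the input numbers are hypothetical.

```python
from math import ceil


def estimate_duration_days(sample_per_variation, variations, daily_visitors, min_days=14):
    """Days needed to reach the required sample, rounded up to whole weeks."""
    total_needed = sample_per_variation * variations
    raw_days = ceil(total_needed / daily_visitors)
    # Never go below the minimum, and always finish on a full week.
    full_weeks = ceil(max(raw_days, min_days) / 7)
    return full_weeks * 7


if __name__ == "__main__":
    # Hypothetical inputs: 22,600 visitors per variation, 2 variations, 2,000 visitors/day.
    print(estimate_duration_days(22_600, 2, 2_000))  # 23 raw days, rounded up to 28
```

Rounding up to full weeks keeps every weekday equally represented, so a strong Monday or a weak weekend does not tilt the result.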

These tools also highlight the connection between traffic volume and test duration. Low-traffic websites often require longer testing periods to collect enough data, while high-traffic sites can reach meaningful conclusions much faster [4].

Now, let’s dive into how PIMMS leverages these insights to fine-tune your A/B test timelines.

How PIMMS Helps Set Test End Dates

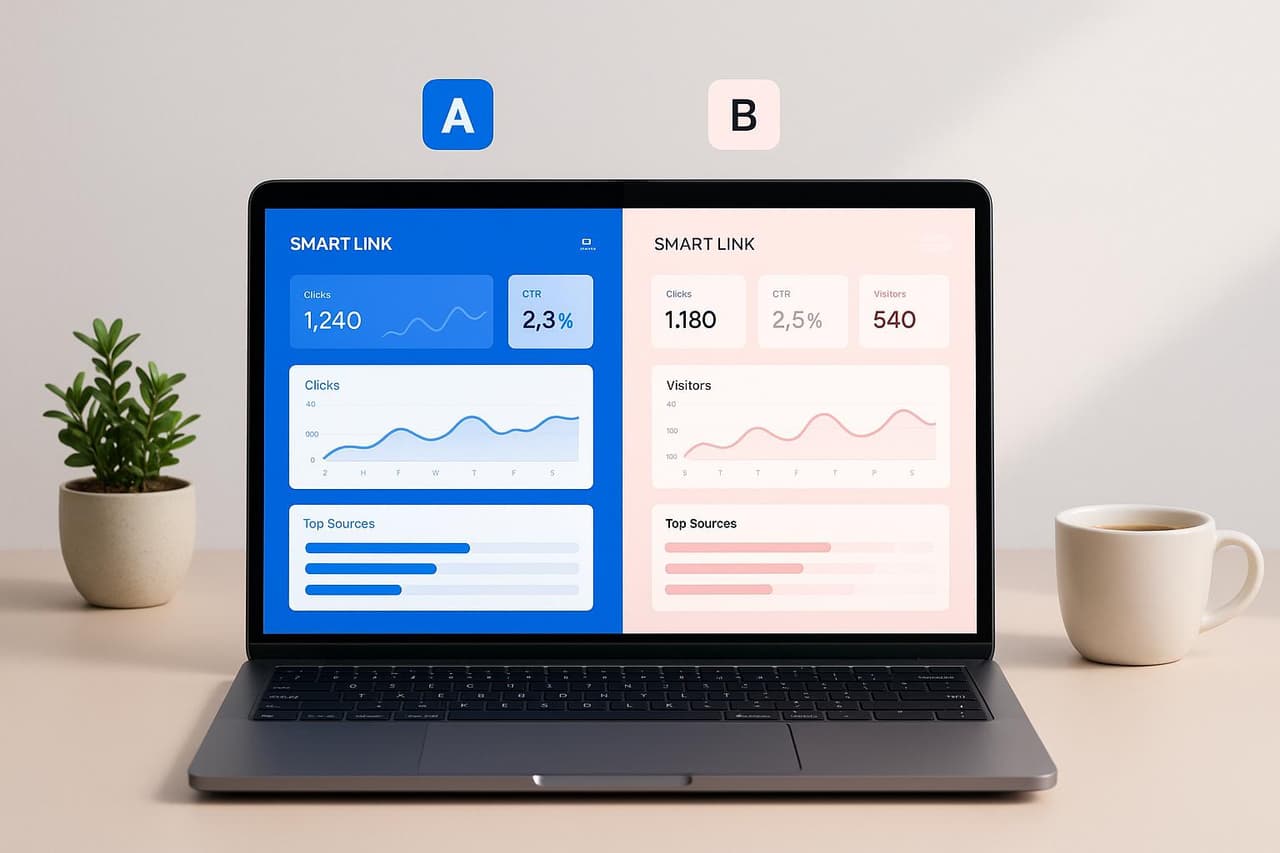

PIMMS simplifies A/B testing by automatically recommending when to end your test based on traffic patterns and statistical needs. When you set up an A/B test, PIMMS analyzes your historical click and conversion data to calculate the ideal test duration.

The platform continuously monitors your test's progress with real-time analytics, ensuring you don’t stop too soon. By tracking metrics like clicks, conversions, and sales across all variations, PIMMS provides a clear picture of performance while adhering to statistical rigor.

It also accounts for business cycles and seasonal trends when determining test durations. For instance, if your website’s performance fluctuates during specific days or months, PIMMS adjusts its recommendations to reflect these patterns. This ensures your test captures accurate and representative user behavior.

By integrating these automated tools, you can streamline your testing process and make more informed decisions.

Adding Testing Tools to Your Workflow

Incorporating tools like A/B test duration calculators and platforms such as PIMMS into your workflow can make your testing strategy more effective and reduce the chances of drawing premature conclusions.

Start by setting up a standardized process for all your A/B tests. Before launching a test, use a duration calculator to estimate how long it should run based on your traffic and expected improvement. This step not only helps you plan better but also sets realistic expectations with your team, avoiding pressure to wrap up tests too soon.

Take into account any business constraints that might affect your testing timeline, like upcoming product launches or seasonal campaigns [4]. For example, if a calculator suggests a four-week test but you only have two weeks before a major promotion, you may need to adjust your expectations for the minimum detectable improvement.
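When the runtime is fixed, you can turn the calculation around and ask what lift the available traffic can detect. The sketch below uses a simplified closed-form approximation that treats both variations as having the baseline variance, at 95% confidence and an assumed 80% power. The function name and example numbers are hypothetical.

```python
from statistics import NormalDist


def detectable_relative_lift(baseline_rate, visitors_per_variation, alpha=0.05, power=0.80):
    """Approximate smallest relative lift detectable with a fixed sample size."""
    z_total = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    # Simplification: assume both arms share the baseline variance.
    variance = 2 * baseline_rate * (1 - baseline_rate) / visitors_per_variation
    absolute_lift = z_total * variance ** 0.5
    return absolute_lift / baseline_rate


if __name__ == "__main__":
    # Hypothetical: 5% baseline, comparing a four-week and a two-week sample.
    for visitors in (20_000, 10_000):
        mde = detectable_relative_lift(0.05, visitors)
        print(f"{visitors:,} visitors per variation: about {mde:.1%} relative lift")
```

Under this approximation, halving the sample raises the detectable lift by a factor of about 1.41 (the square root of 2), which is the trade-off you accept when you shorten a test.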

PIMMS integrates with platforms like Stripe, Shopify, and Zapier, enabling you to track the entire customer journey - from clicks to conversions. This level of tracking helps you evaluate not just which test variation performs better but also how it impacts your overall revenue.

Finally, keep an eye on external factors that could influence your test results, such as holidays, marketing campaigns, or industry events. While calculators provide a solid starting point, your business expertise and the insights from platforms like PIMMS will guide your final decisions on when to conclude a test.

Building a consistent approach with these tools and processes ensures more reliable results and creates a solid foundation for making optimization decisions.

Best Practices for A/B Test Duration

Now that you’ve nailed down the ideal duration and tools for your A/B testing, it’s time to focus on best practices that ensure your tests yield reliable and actionable insights. Running effective A/B tests takes both discipline and patience. These tips will help you sidestep common pitfalls that can lead to misleading results and poor decisions.

Wait for Statistical Significance

Statistical significance is your safeguard against making decisions based on random chance. It indicates that the differences you see between test variations are unlikely to be noise and are more likely to hold up when the winning version is implemented [13]. Interestingly, a study analyzing 28,304 experiments found that only 20% reached the 95% significance level [12]. This highlights the importance of waiting for proper validation before drawing any conclusions.

To avoid skewed results, set your test duration upfront and resist the temptation to check results daily [13]. Checking too often increases the risk of false positives: peeking twice can more than double the error rate, while checking five or ten times can triple or quadruple it [11]. Another key step is calculating your sample size in advance. Predefine parameters like your baseline conversion rate, minimum detectable effect, and desired confidence level to avoid ending tests too soon based on misleading early trends [14].
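A small simulation shows why peeking is risky. The sketch below runs repeated A/A tests (both variations share the same true conversion rate) and compares two stopping rules: declaring a winner at any interim look versus testing once at the end. All parameters are assumptions chosen for illustration, and the exact rates depend on them.

```python
import random
from statistics import NormalDist


def is_significant(conv_a, conv_b, visitors_per_arm, alpha=0.05):
    """Pooled two-proportion z-test for equally sized arms."""
    pooled = (conv_a + conv_b) / (2 * visitors_per_arm)
    std_err = (2 * pooled * (1 - pooled) / visitors_per_arm) ** 0.5
    if std_err == 0:
        return False
    z = abs(conv_a - conv_b) / visitors_per_arm / std_err
    return z > NormalDist().inv_cdf(1 - alpha / 2)


def false_positive_rates(sims=500, visitors=1000, looks=10, rate=0.10, seed=1):
    """Return (rate when stopping at any significant look, rate with one final look)."""
    rng = random.Random(seed)
    step = visitors // looks
    peek_hits = final_hits = 0
    for _ in range(sims):
        conv_a = conv_b = 0
        flagged_at_any_look = False
        for look in range(1, looks + 1):
            # Both arms draw from the same true rate, so any "winner" is a false positive.
            conv_a += sum(rng.random() < rate for _ in range(step))
            conv_b += sum(rng.random() < rate for _ in range(step))
            significant = is_significant(conv_a, conv_b, look * step)
            flagged_at_any_look = flagged_at_any_look or significant
        peek_hits += flagged_at_any_look
        final_hits += significant  # result of the last look only
    return peek_hits / sims, final_hits / sims


if __name__ == "__main__":
    peeking, final_only = false_positive_rates()
    print(f"False positives with peeking: {peeking:.1%}")
    print(f"False positives with a single final look: {final_only:.1%}")
```

Because the final look is one of the interim looks, the peeking rate can never be lower than the single-look rate. The more often you check and act on the result, the wider the gap tends to get.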

Even after achieving statistical significance, let the test run for its full planned duration so it covers complete business cycles.

Don't Stop Tests Early

Cutting tests short can lead to unreliable outcomes and costly mistakes. Early results often swing wildly due to small sample sizes and don’t account for factors like business cycles, seasonality, or external events [3].

"When your experiments run for a short time, you run the risk of getting false positive results. Because your A/B test runtime was too short, it never accounted for variability in seasons, business cycle, holidays, weekdays, and other factors that influence customer behavior." [3]

Small sample sizes can distort results, as Lucia van den Brink, Lead Consultant at Increase Conversion Rate, explains:

"The more data you have the more accurate it gets. The first few days of an A/B test, the data fluctuates because there is not enough data yet. It might be that you have one customer buying 100 products in your variant. This outlier makes a greater impact on a small data set." [3]

Take, for example, an A/A test conducted by GrowthRock. Both variations were identical, yet after just 3,000 visitors per variation, a 30.4% difference in successful checkouts with 95% statistical significance was observed [15]. This underscores the importance of running tests for at least two business cycles (typically 2–4 weeks) to account for natural fluctuations in behavior [3].

At the same time, be mindful of external conditions that could influence your test results.

Track External Factors

External events like marketing campaigns, seasonal trends, holidays, or unexpected occurrences can heavily impact your test outcomes [16]. For example, Intuit found that tests showing a 10–20% lift in November produced no lift in April, even though the page content was identical. This dramatic change was due to the difference in user motivation between November (tax preparation season) and April (post-tax season) [16].

"Seasonality and other external factors impact tests a lot." - Peep Laja, Founder, CXL [16]

To minimize these effects, document major events such as product launches, marketing campaigns, or industry news, and segment your data by user type to spot shifts caused by these factors [17]. Additionally, avoid running tests during major marketing pushes to limit the influence of traffic spikes from skewed sources [3].

Conclusion: Plan Your A/B Tests for Success

Getting the timing right for your A/B tests starts with a solid plan that factors in traffic patterns, business cycles, and statistical needs. By laying this groundwork before launching, you set yourself up for reliable and actionable results.

Start by calculating your optimal sample size. Define your baseline conversion rate, the smallest effect you want to detect, and aim for a 95% confidence level. Use these inputs with a calculator to determine realistic timelines based on your traffic volume.

When planning, align your tests with at least two full business cycles to capture the complete range of user behavior. As Shiva Manjunath, Experimentation Manager at Solo Brands, emphasizes:

"True experiments should be running a minimum of 2 business cycles, regardless of how many variations." [3]

Be prepared for real-world factors that might extend your timeline, as discussed in the Best Practices section. Build in a buffer period to ensure you collect data for the full duration of your test before making decisions.

Tools like PIMMS can simplify this process by allowing you to set test end dates upfront. This feature helps you avoid the temptation to stop tests prematurely, ensuring you gather sufficient data. Plus, with real-time analytics, you can monitor progress without compromising your test's integrity.

It's worth remembering: quality data always outweighs the rush for quick answers. Taking the time to let your test run its course ensures your decisions are backed by complete and accurate insights. As Anurag Singh, PGDM in Marketing & Analytics, advises:

"A test has run long enough when you've reached statistical significance and have enough sample size... And, avoid stopping too early - even if one variation looks like it's winning." [18]

Approach each A/B test as a scientific experiment. Define your parameters, stick to your timeline, and trust the data to reveal the full picture.

FAQs

How do I determine the right sample size for my A/B test?

When setting up an A/B test, getting the sample size right is key to producing trustworthy results. This depends on factors like your current conversion rate and the smallest change you want to measure, also known as the minimum detectable effect. A common guideline suggests aiming for at least 30,000 visitors and 3,000 conversions per variant, though the exact numbers can shift based on your website traffic and specific objectives.

For a more accurate calculation, you can rely on statistical formulas or tools designed to estimate sample size. These take into account your baseline metrics and the confidence level you’re aiming for. It's crucial to let your test run long enough to collect adequate data - cutting it short can lead to unreliable conclusions and undermine the statistical significance of your results.

Why is it risky to stop an A/B test too soon, and how can I ensure accurate results?

Stopping an A/B test too soon can lead to misleading outcomes. Early results might just be random noise rather than a genuine performance difference, which could lead to poor decisions and ineffective changes.

To get reliable insights, it's crucial to let your test run long enough to account for statistical significance, seasonal trends, and external influences. A solid guideline is to run your test for at least 2–4 weeks or two business cycles, depending on your traffic volume and objectives. This timeframe helps ensure you're capturing meaningful data, including any delayed or long-term impacts of the test.

How do seasonal trends and business cycles affect the length and results of A/B tests?

Seasonal patterns and business cycles can have a big impact on how long A/B tests should run and the results they produce. To get dependable insights, it’s crucial to let tests run for a sufficient period - usually 2–4 weeks - to capture at least two complete business cycles. This timeframe helps smooth out temporary changes caused by things like holidays, marketing pushes, or other external events that might skew user behavior in the short term.

It’s also a smart move to conduct tests during steady periods, avoiding major seasonal spikes. Weekly or daily traffic patterns play a role too, so cutting a test short can lead to misleading conclusions. By letting the test run its full duration, you’ll ensure the data reflects meaningful trends rather than short-term blips.